We have a very special speaker coming to ScyllaDB Summit 2017 to talk to you about Stateful Streaming Applications for Spark. His name is Burak Yavuz and he works for Databricks. He will be one of many brilliant minds speaking about big data and NoSQL technologies at the summit. Let’s begin the interview and learn more about Burak’s background and his upcoming talk.

Please tell us about yourself and what you do at Databricks?

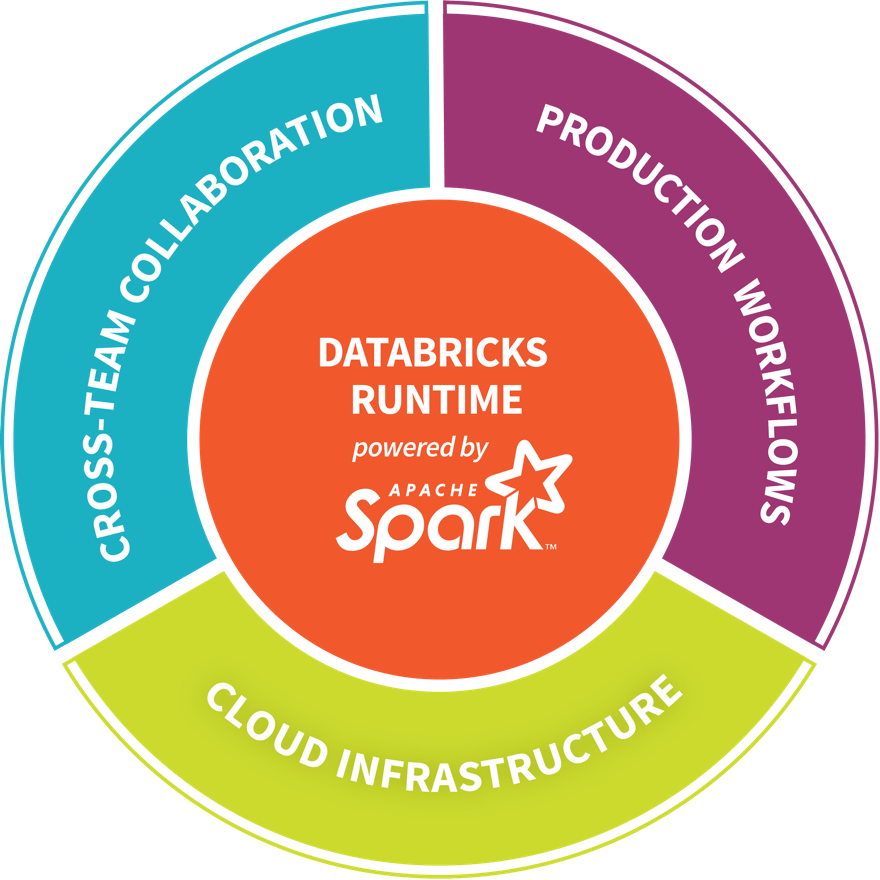

I’m a Software Engineer at Databricks working on Apache Spark’s Structured Streaming team. Besides contributing to this project, I’m also part of the team that builds internal streaming ETL pipelines at scale for our production systems. In other words, what we contribute to Apache Spark’s Structured Streaming, we also use at scale at Databricks.

How did you get involved with Apache Spark’s Structured Streaming?

I was on the Data Engineering team at Databricks, building our centralized data pipeline, which processes terabytes of data collected from Databricks services every day. Most companies build very similar data pipelines. Building ours wasn’t easy, and I’ve heard other companies describe in their talks how hard it is to build these pipelines. We then started discussing how we could simplify these kinds of workloads, and Structured Streaming came out of that!

What Spark packages do you maintain and what do they do or provide?

I originally maintained the Spark Packages website. Our goal with Spark Packages was to maintain a package index for all the cool things users were building on top of Spark. Spark has a huge ecosystem around it. People connect to a plethora of data sources, write applications in Python, R, Scala, and Java, and deploy Spark on different cloud providers such as AWS, Azure, and GCE, or in containers such as Docker. There are just so many cool integrations developers have built for Spark, and we wanted to give them a central place where they can showcase these integrations and where other people can find and use them.

I’ve written a couple of packages for fun, such as lazy-linalg, which provides matrix algebra APIs on Spark with performance on the JVM comparable to specialized C++ code for certain operations.

What will you be talking about at ScyllaDB Summit 2017?

I will be talking about performing stateful aggregations on streaming data with Structured Streaming.

What type of audience will be interested in your talk?

Data engineers, data scientists, solution architects, and system integrators. Anyone who has to work with the processing, cleaning, enrichment, and reporting of data.

Can you please tell me more about your talk?

When working with streaming data, stateful operations are a common use case. If you would like to de-duplicate data, calculate aggregations over event-time windows, or track user activity over sessions, you are performing a stateful operation.

Apache Spark provides users with a high-level, easy-to-use DataFrame/Dataset API for working with both batch and streaming data. The funny thing about batch workloads is that people tend to run them over and over again. Structured Streaming allows users to run these same workloads, with the exact same business logic, in a streaming fashion, helping them answer questions at lower latencies.

In this talk, we will focus on stateful operations with Structured Streaming. Through live demos, we will show how NoSQL stores can be plugged in as a fault-tolerant state store for intermediate state, and how they can be used as a streaming sink, where the output data can be stored indefinitely for downstream applications.
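To make the idea of a stateful operation concrete, here is a minimal sketch in plain Python. It is not Spark's API and not the code from the talk; the `StreamState` class and its fields are hypothetical names chosen for illustration. It shows why de-duplication and event-time window counts need state that survives from one micro-batch to the next.

```python
from collections import defaultdict


class StreamState:
    """Hypothetical holder for state carried across micro-batches."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.seen_ids = set()                   # state for de-duplication
        self.window_counts = defaultdict(int)   # state for windowed counts

    def process_batch(self, events):
        """Fold one batch of (event_id, event_time_seconds) pairs into the state."""
        for event_id, event_time in events:
            if event_id in self.seen_ids:
                continue  # duplicate delivery: this event was already counted
            self.seen_ids.add(event_id)
            # Assign the event to a tumbling window based on its event time.
            window_start = event_time - (event_time % self.window_seconds)
            self.window_counts[window_start] += 1
        return dict(self.window_counts)


state = StreamState(window_seconds=60)
first = state.process_batch([("a", 5), ("b", 42), ("c", 61)])
# The second batch redelivers "b" and carries a late event "d" for the first window.
second = state.process_batch([("b", 42), ("d", 59), ("e", 130)])
print(first)
print(second)
```

In a real deployment the `seen_ids` and `window_counts` structures would have to live in a fault-tolerant state store rather than in process memory, which is the role the talk proposes for a NoSQL store.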

Can you please tell me more about what a streaming sink does and how it can benefit applications?

In a Structured Streaming application, you connect to one or more sources (files on a blob store, Apache Kafka, Amazon Kinesis), perform your business logic, and then output the data to a sink. Basically, a streaming sink is where data gets written out to. The sink could be a blob store such as S3, a file system such as HDFS, a database, or even the console. For example, a NoSQL data store can be used as a streaming sink that powers a web page.

Imagine a use case where a user purchases a product. Once the purchase is made, you would like to recommend what else to buy. A Structured Streaming application can update a customer’s recommendations based on their most recent purchases. The application can then save the updated set of recommendations to a NoSQL store, and your website can serve them when the user returns or simply opens another page. Being able to do this at low latency is really important, and Spark provides an end-to-end platform both for training your machine learning models and for using these models in a streaming setting.
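The source, business logic, and sink flow described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not Spark's sink API: `KeyValueSink`, `RELATED`, `recommend`, and `run_pipeline` are hypothetical names, and a dictionary stands in for the NoSQL table that a website would read from.

```python
class KeyValueSink:
    """Hypothetical sink: a dict stands in for a NoSQL table keyed by user."""

    def __init__(self):
        self.table = {}

    def write_batch(self, rows):
        # Upsert by key, so replaying a batch after a failure gives the same result.
        for user, recommendations in rows:
            self.table[user] = recommendations


# Hypothetical lookup of products that are often bought together.
RELATED = {"tent": ["sleeping bag", "stove"], "stove": ["fuel"]}


def recommend(purchases):
    """Business logic: map each (user, product) purchase to recommendations."""
    return [(user, RELATED.get(product, [])) for user, product in purchases]


def run_pipeline(source_batches, sink):
    """Source -> business logic -> sink, one micro-batch at a time."""
    for batch in source_batches:
        sink.write_batch(recommend(batch))


sink = KeyValueSink()
run_pipeline([[("ann", "tent")], [("bob", "stove"), ("ann", "stove")]], sink)
print(sink.table)
```

Writing by key means the most recent purchase overwrites older recommendations for the same user, which matches the "update on most recent purchases" behavior in the example; a real recommender would of course use a trained model instead of a static lookup.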

Where can we learn more information about your talk?

The Databricks blog has a great set of posts on Structured Streaming.

How can people get in touch with you?

You can find me on LinkedIn.

Thank you very much, Burak. We cannot wait to see your talk in person and learn more. If you want to enjoy more talks like this one, join us at ScyllaDB Summit 2017.

ScyllaDB Summit is taking place in San Francisco, CA on October 24-25. Check out the full agenda on our website to learn about the rest of the talks—including technical talks from the ScyllaDB team, the ScyllaDB roadmap, and a hands-on workshop where you’ll learn how to get the most out of your ScyllaDB cluster.