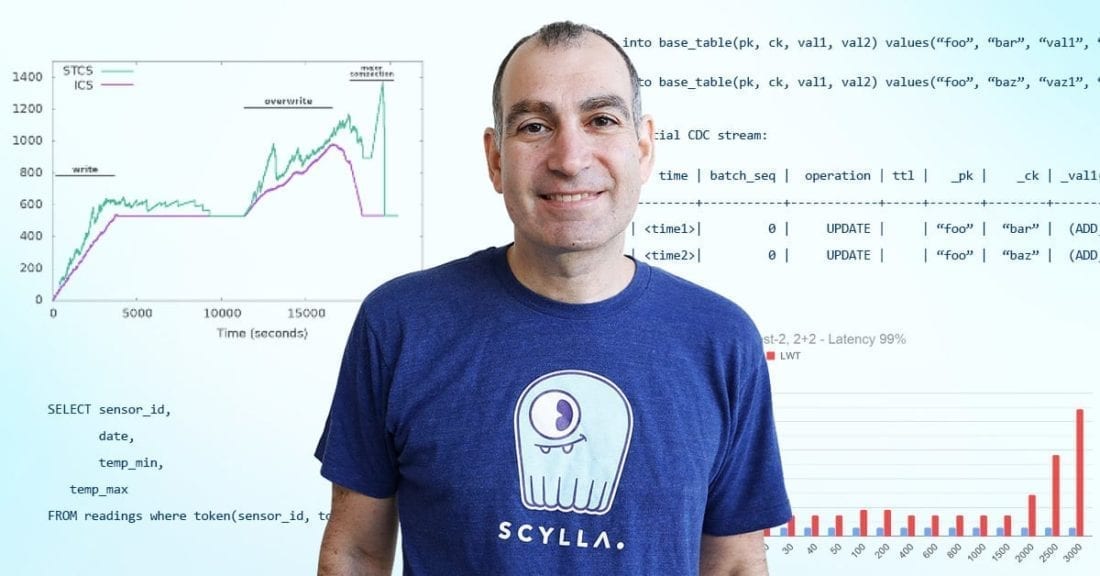

Recently we hosted the first of our new series of AMA sessions with ScyllaDB CTO Avi Kivity. Join our Meetup to be notified of the next AMA.

Here’s how our first AMA went:

Q: Would you implement ScyllaDB in Go, Rust or JavaScript if you could?

Avi: Good question. I wouldn’t implement ScyllaDB in JavaScript. It’s not really a high-performance language, but I will note that Node.js and Seastar share many characteristics. Both use a reactor pattern and are designed for high concurrency. Of course the performance is going to be very different between the two, but writing code for Node.js and writing code for Seastar is quite similar.

Go also has an interesting take on concurrency. I still wouldn’t use it for something like ScyllaDB. It is a garbage-collected language so you lose a lot of predictability, and you lose some performance. The concurrency model is great. The language lacks generics. I like generics a lot and I think they are required for complex software. I also hear that Go is getting generics in the next iteration. Go is actually quite close to being useful for writing a high-performance database. It still has the downside of having a garbage collector, so from that point-of-view I wouldn’t pick it.

If you are familiar with how ScyllaDB uses direct I/O and asynchronous I/O, this is not something that Go is great at right now. I imagine that it will evolve. So I wouldn’t pick JavaScript or Go.

However, the other language you mentioned, Rust, does have all of the correct characteristics that ScyllaDB requires. It gives you precise control over what happens. It doesn’t have a garbage collector, which means that you have predictability over how much time things take, like allocation. You don’t have pause times. And it is a well-designed language. I think it is better than C++, which we are currently using. So if we were starting at this point in time, I would take a hard look at Rust, and I imagine that we would pick it instead of C++. Of course, when we started Rust didn’t have the maturity that it has now, but it has progressed a long way since then and I’m following it with great interest. I think it’s a well-done language.

Q: Can I write a plugin to extend ScyllaDB?

Avi: Right now you cannot. We do have support for Lua, which can be used to write plugins, but we did not yet prepare any extension points that you can actually use. You can only use them to write User Defined Functions (UDFs) for now. But we do have Lua as infrastructure for plugins. So if you have an idea for something you would need a plugin for, just file an issue or write to the users mailing list and we can see if it’s something that will be useful for many users. Then we can consider writing this extension point. And then you’ll be able to write your extensions with our extension language, Lua.

Q: What did you take from the KVM/OSv projects to use in ScyllaDB?

Avi: KVM and OSv taught us how to talk directly to the hardware and how to be mindful of every cycle; how to drive the efficiency. KVM was designed for use on large machines so we had to be very careful when architecting it for multi-core. And the core counts have only increased since then. So it taught us how to both deal with large SMP [Symmetric Multiprocessor] systems and also how to deal with highly asynchronous systems.

OSv is basically an extension of that so we used a lot of our learnings from KVM to write OSv in the same way, dealing with highly parallel systems and trying to do everything asynchronously. So that the system does not block on locks and instead just switches to do something else. These are all ideas that we used in ScyllaDB. More in Seastar than ScyllaDB. Seastar is the underlying engine.

There is also the other aspect, which is working on a large scale open source project. We are doing the same thing here with a very widely dispersed team of developers. I think our developers come from around fifteen countries. This is something that we’re used to from Linux, and it is a great advantage for us.

Q: How does the ScyllaDB client driver target a specific shard on a target host node? Does it pick TCP source port to match target node NIC RSS [receive-side scaling] setting?

Avi: Actually we have an ongoing discussion about how to do that. The scheme that we currently use is a little bit awkward. So what the client does is it first negotiates the ability to connect with a particular shard. Just make sure it’s connecting to a server that has this capability. Then it just opens a bunch of connections which arrive at a target shard more or less randomly. And then it discards all of the connections that it has in excess. If it sounds like a very inefficient way to do things, then that’s the case. But it was the simplest for us and it did not depend on anything. It didn’t need to depend on the source ports being unchanged.

Because it is awkward we are now considering another iteration on that, depending on the source port. The advantage of that is it allows you to open exactly the number of connections you need. You don’t have to open excess connections and then discard them, which is wasteful. But there are some challenges. One of the challenges is that perhaps not all languages may allow you to select the source port. And if so, they wouldn’t be able to take advantage of that mechanism and will have to fall back on the older mechanism. The other possible problem is that network address translation [NAT] between the client and the server may alter the source port. If that happens it stops working, so we will have to make it optional for users that are behind network address translation devices.

I think the question also mentioned the hash computed by the NIC, and we’re not doing that. It is too hard to coordinate. Different NICs have different hash functions. Right now we basically have a random connection to a shard, and then we discard the excess connections. We’re thinking of adding on top of that using the source port for directing a connection to a specific shard.
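
Editor’s note: the two schemes described above can be compared with a minimal Python sketch. It is a simulation, not driver code. The function names are hypothetical, and the mapping `source_port % shard_count` is an assumption made for illustration; the answer does not say how a source port would be mapped to a shard.

```python
import random


def shard_for_port(source_port, shard_count):
    """ASSUMED mapping for illustration: route a connection by its source port."""
    return source_port % shard_count


def connect_and_discard(shard_count, rng):
    """Current scheme: open connections until every shard has one.

    Returns (shard -> connection id, number of discarded connections).
    """
    kept = {}
    discarded = 0
    conn_id = 0
    while len(kept) < shard_count:
        shard = rng.randrange(shard_count)  # connection lands on a shard at random
        if shard in kept:
            discarded += 1                  # excess connection, close it
        else:
            kept[shard] = conn_id
        conn_id += 1
    return kept, discarded


def pick_source_port(target_shard, shard_count, low=49152, high=65535):
    """Proposed scheme: choose a source port that maps to the wanted shard."""
    for port in range(low, high + 1):
        if shard_for_port(port, shard_count) == target_shard:
            return port
    raise ValueError("no suitable port in range")


if __name__ == "__main__":
    kept, discarded = connect_and_discard(8, random.Random(1))
    print("connect-and-discard wasted", discarded, "connections")
    print("ports per shard:", [pick_source_port(s, 8) for s in range(8)])
```

With the port-based scheme the client opens exactly one connection per shard, which is the advantage named in the answer. The sketch does not model NAT rewriting the source port, which is why the random scheme would remain as a fallback.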

Q: Any plans for releasing ScyllaDB on Azure and/or GCP?

Avi: First, you can install ScyllaDB on Azure or on GCP. We don’t provide images right now so it’s a little bit more work. You have to select an OS image and then follow the installation procedure. Just as you would for on-prem. So it’s a little bit more work than is necessary.

We are working to provide images, which will save that work. You will just be able to select the ScyllaDB image from the marketplace, launch it, and it will start working immediately.

The other half is ScyllaDB Cloud. We are working on GCP and Azure but think that GCP is the one next in line. I don’t have the timelines but we’re trying to do it as quickly as possible so that people can use ScyllaDB. Especially people who are migrating away from Amazon and want a replacement for Dynamo. They can find it in ScyllaDB running on GCP.

Q: Do you plan at some point in the future to support an Alternator [ScyllaDB’s DynamoDB-compatible API] that includes the feature of DynamoDB Transactions?

Avi: Yes. So DynamoDB has two kinds of transactions. The first kind is a transaction that is within a single item. That is already supported. It is implemented on top of our Lightweight Transactions [LWT] support that’s used for regular CQL operations.

There is another kind which is a multi-item transaction and that we do plan to support, but it is still in the future. It’s not short-term implementation work. What we are doing right now is implementing Raft. This is the kind of infrastructure for transactions. Then we will implement Alternator transactions on top of the Raft infrastructure.

Q: ScyllaDB and Seastar took the approach of dynamically allocating memory. Other HPC [High Performance Computing] products sometimes choose the approach of pooling objects and pre-allocating the object. Did you consider the pooling approach for ScyllaDB? Why did you choose this memory allocation approach?

Avi: Interesting question. Basically the approach of having object pools works when you have very, very regular workloads. In ScyllaDB the workload is very irregular. You can have a workload that has lots of reads with, I don’t know, 40 byte objects, or you can have a workload that has one megabyte objects. You can have simple workloads where you just do simple reads and writes, and you can have complex workloads that use indexes and indirect indexes and build materialized views. So the pattern of memory use varies widely between different workloads.

If you had a pool for everything then you would end up with a large number of pools most of which are not in use. The advantage of dynamic use of memory is that you can repurpose all of the memory. The memory doesn’t have a specific role attached to it. This is even dynamic. So the workload can start with one kind of use for memory and then shift to another kind of use. We don’t even have a static allocation between memtables and cache. So if you have a read-only workload, then all of the memory gets used for cache. But if you have a read-write workload then half of the memory gets used for memtables and half of the memory gets used for cache.

There is also working memory that is used to manage connections and serve in-flight requests, and that is also shared with the cache. So if you have a workload that uses a lot of connections and has high concurrency, then that memory is taken from the cache and is used to serve those requests. With a workload that doesn’t have high concurrency, instead of having that memory sitting in a pool and doing nothing, that memory is part of the cache.

The advantage is that everything is dynamic and you can use more of your memory at any given time. Of course it results in a much more complex application because you have to keep track of all of the users of the memory, and make sure that you don’t use more than 100%. With pools it’s easy if you take your memory and you divide it among the pools. But if you have dynamic use you have to be very careful to avoid running out of memory.
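
Editor’s note: as a rough illustration of the trade-off described above, here is a minimal Python sketch of a single memory budget shared between a cache and working memory for requests. The class and method names are hypothetical, and this is a toy model of the idea, not how ScyllaDB or Seastar implement it.

```python
from collections import OrderedDict


class SharedMemory:
    """One budget shared by the cache and by working memory for requests."""

    def __init__(self, total):
        self.total = total
        self.working = 0            # bytes held by in-flight requests
        self.cache = OrderedDict()  # key -> size, oldest entry first

    def cache_bytes(self):
        return sum(self.cache.values())

    def free(self):
        return self.total - self.working - self.cache_bytes()

    def _make_room(self, size):
        """Evict cache entries until `size` bytes are free, if that is possible."""
        if size > self.total - self.working:
            return False            # would exceed 100% of memory even with no cache
        while self.free() < size:
            self.cache.popitem(last=False)  # evict the oldest cache entry
        return True

    def cache_put(self, key, size):
        self.cache.pop(key, None)
        if not self._make_room(size):
            return False
        self.cache[key] = size
        return True

    def reserve_working(self, size):
        """Take memory for a request, shrinking the cache when needed."""
        if not self._make_room(size):
            return False
        self.working += size
        return True

    def release_working(self, size):
        self.working -= size


if __name__ == "__main__":
    mem = SharedMemory(100)
    for i in range(10):
        mem.cache_put(i, 10)        # idle workload: the cache owns all memory
    mem.reserve_working(30)         # concurrency rises: the cache shrinks
    print(mem.cache_bytes(), mem.working)
```

The bookkeeping in `_make_room` is the extra complexity mentioned in the answer: every user of memory has to go through one accountant so that the total never exceeds the budget.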

Q: Is it true that ScyllaDB uses C++20 already?

Avi: Yes. We’re using C++20. We switched about a month ago now. The feature I was really anxious to start using C++20 for is coroutines, which fit very well with the asynchronous I/O that we do everywhere. It promises to really simplify our code base, but that feature is not ready. We’re waiting for some fixes in GCC, the compiler. As soon as those fixes hit the compiler we will start using coroutines. The other C++20 features are already in use and we’re enjoying them.
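
Editor’s note: to show why coroutines can simplify asynchronous code, here is a small analogy in Python’s `asyncio`. It is not Seastar or C++20 code, and `read_block` is a hypothetical stand-in for an asynchronous disk read. The same logic is written twice: once with chained callbacks and once as a coroutine.

```python
import asyncio


async def read_block(offset):
    """Hypothetical stand-in for an asynchronous disk read."""
    await asyncio.sleep(0)
    return bytes([offset % 256]) * 4


def checksum_callbacks(offsets, loop):
    """Continuation style: each step schedules the next one from a callback."""
    result = loop.create_future()
    remaining = list(offsets)
    total = 0

    def start_next():
        if not remaining:
            result.set_result(total)
            return
        task = loop.create_task(read_block(remaining.pop(0)))
        task.add_done_callback(on_read)

    def on_read(task):
        nonlocal total
        total += sum(task.result())
        start_next()

    start_next()
    return result


async def checksum_coroutine(offsets):
    """Coroutine style: the same logic reads like straight-line code."""
    total = 0
    for offset in offsets:
        total += sum(await read_block(offset))
    return total


async def main():
    loop = asyncio.get_running_loop()
    from_callbacks = await checksum_callbacks([1, 2, 3], loop)
    from_coroutine = await checksum_coroutine([1, 2, 3])
    return from_callbacks, from_coroutine


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Both versions compute the same value; the coroutine version keeps the loop and the running total in ordinary local variables instead of spreading them across callbacks.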

Q: It’s being said that wide column storage is good for messenger-like applications. High frequency of small updates. If so, could you explain why ScyllaDB would work better than MongoDB for such workloads?

Avi: First I have to say that I think that all this, the labeling of wide column storage, is a misnomer. It comes back from the days, the early days of Apache Cassandra where it was schemaless. You would allocate a partition and you could push as many cells as you wanted into a partition without any schema and those would be termed a “wide column.” But these days both Cassandra and ScyllaDB don’t use this model. Instead you divide your partition into a large number of rows and each row has a moderate number of columns. So it’s not really accurate to refer to ScyllaDB as a wide column. Of course the name stuck and it’s impossible to make it go unstuck.

To go back to the question about whether it fits a messaging application, yes, we actually have Discord, a very famous messaging application using ScyllaDB. If it’s a good fit for them then it’s a good fit for any messaging application.

Comparing to Mongo, I don’t think the problem is the data model. It’s really about the scalability and the performance. If you have a messaging application that has a high throughput, if you’re successful and you have a high number of users, then you need to use something like ScyllaDB in order to scale. If you have a small number of users and you don’t have high performance, then anything will work. It really depends on whether you have high scales or not.

Q: Can you compare ScyllaDB to Aerospike? Do they optimize for the same use cases at all?

Avi: Yes. Though in some respects Aerospike is similar to ScyllaDB I would say that the main differences are that Aerospike is partially in-memory. So Aerospike has to keep all of the keys in memory and ScyllaDB does not. That allows ScyllaDB to address much larger storage. If you have to keep all of the keys in memory then even if you have a lot of disk space as soon as you exhaust the memory for the keys then you need to start adding nodes. Whereas with ScyllaDB we support a disk-to-memory ratio of 100 to 1.

So if you have 128 gigs of RAM then you can have 10 terabytes of disk. There are, of course, servers with more than 128 gigs of RAM, so we do have users who are running with dozens of terabytes of disk. I think this is something that Aerospike does not do well. I’m not an expert in Aerospike, so don’t hold me to that. My understanding is this is a point where ScyllaDB would perform much better by allowing this workload to be supported.

If you have a smaller amount of data then I think they are similar, though I think we have a much wider range of features. We support more operations than Aerospike. Better cross datacenter replication. But again, I’m not really an expert in Aerospike so I can’t say a lot about that.

Q: Does ScyllaDB also support persistent memory? Any performance comparisons with regular RAM or maybe PMEM as cache? Or future work planned?

Avi: We did do some benchmarks with persistent memory. I think it was a couple of years ago. You can probably find them on our blog. We did that with Intel disks. We currently don’t have anything planned with persistent memory, and the reason is it’s not widely available. You can’t find it easily. Of course you can buy it if you want, but you can’t just go to a cloud and select an instance with persistent memory.

When it becomes widely available then sure it makes a lot of sense to use it as a cache expander. This way you can get even larger ratios of disk-to-memory and so improve the resource utilization. It is very interesting for us but it doesn’t have enough presence in the market yet to make it worth our while to start optimizing for it.

Q: What’s the status of Clang MSVC compliance? Is that something you’re on?

Avi: ScyllaDB does compile with Clang. We have a developer that keeps testing it and sends patches every time we drift away from Clang. Our main compiler is GCC, but we do compile with Clang.

Recently, because of some gaps in Clang’s C++20 support we drifted a bit away, but I imagine as soon as Clang comes back into compliance then we’ll be able to build with Clang again.

MSVC is pretty far away. And the reason here is not the compiler but the ecosystems. We’re heavily tied into Linux. I imagine we could port Seastar to Windows but there really isn’t any demand for running ScyllaDB and other Seastar applications on Windows. Linux really is the performance king for such applications. So this is where we are focusing.

It could be done, so if you’re interested, you could look at porting Seastar to Windows. But I really don’t know in terms of compiler compliance whether MSVC is there or not. I hear that they’re getting better lately but I don’t have any first hand knowledge. [Editor’s note: users can read more about Clang and MSVC compliance here.]

Q: Does ScyllaDB support running on AWS Graviton2 instances? If so, what are your insights and experience on performance compared with AMD/Intel instances, specifically for a ScyllaDB database workload?

Avi: Interesting question! So we did test, we did run benchmarks on Arm systems, not just Graviton but on Arm systems. ScyllaDB builds and runs on those Arm systems. The Graviton2 systems that Amazon is providing are not really suitable for ScyllaDB because they don’t have a fast NVMe storage. And of course, we need a lot of very fast storage. So you could run ScyllaDB on EBS, Elastic Block Store, but that’s not really a good performance point for ScyllaDB. ScyllaDB really wants fast local storage.

When we get a Graviton-based instance that has fast NVMe storage, then I’m sure that ScyllaDB will be a really good fit for it, and we will support it. We do compile on Arm and work on Arm without any problems. As to whether it will work well? It will. The shared-nothing architecture of ScyllaDB works really well with the Arm relaxed memory model. It really gives the CPU the maximum flexibility in how it issues read and write requests to memory, compared to other applications that use locking.

We do expect to get really good performance on Arm, and we did on the benchmarks that we performed in the past. I think they are on our website as well. So as soon as there are Arm-based instances with fast storage, then I expect we will provide AMIs and you will be able to use them. Even now you can compile it on your own for Arm and it’s supposed to just work.

Q: Does every memtable have a separate commitlog? I assume yes. If so, how are these commitlogs spread among the available disks?

Avi: Okay. So your assumption is incorrect. We have a shared commitlog for all of the memtables within a single shard, or a single core. The reason for that is to consolidate the writes. If you have a workload that writes to many tables, then instead of having to write to many files we just interleave the writes for all of those memtables into a single file, which is more efficient. But we do have a separate commitlog for every shard, or for every core.

This is part of the shared-nothing architecture which tries to avoid any sharing of data or control between cores. Generally the number of active commitlogs is equal to the number of cores on the system. We keep cycling between them. There is one active segment. As soon as it gets filled we open a new active segment and start writing to that.
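
Editor’s note: the layout described above can be pictured with a minimal Python sketch. The class name, the table names, and the segment size are hypothetical; the sketch only models one commitlog per shard, writes for several tables interleaved in it, and a new active segment opened when the current one fills.

```python
class ShardCommitlog:
    """One commitlog per shard, shared by all of that shard's memtables."""

    def __init__(self, segment_size):
        self.segment_size = segment_size
        self.segments = [[]]  # each segment is a list of (table, payload)

    def append(self, table, payload):
        """Append an entry and return the index of the segment it went to."""
        active = self.segments[-1]
        used = sum(len(p) for _, p in active)
        if active and used + len(payload) > self.segment_size:
            active = []                   # active segment is full: open a new one
            self.segments.append(active)
        active.append((table, payload))
        return len(self.segments) - 1


if __name__ == "__main__":
    shard_count = 4
    # Shared-nothing: every shard owns its own commitlog.
    commitlogs = [ShardCommitlog(segment_size=10) for _ in range(shard_count)]
    log = commitlogs[0]
    log.append("users", b"aaaa")
    log.append("orders", b"bbbb")   # different table, same segment
    log.append("users", b"cccc")    # does not fit, goes to a new segment
    print(log.segments)
```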

Q: I have a follow-up question to the commitlog question: If there is only a single commitlog for multiple memtables, then for interleaved workloads, for example a lot of writes to one memtable followed by a single write to a different memtable, won’t it cause both memtables to flush? How do you handle the entries for different memtables within a single commitlog internally?

Avi: It’s a good point. It can cause early flushes of memtables, but the way we handle it is simply by being very generous with the allocation of space to the commitlog. Our commitlog can be as large as the memory size and our memtable is limited to half the memory size. So in practice the memtables flush because they hit their own limits before you hit this kind of forced flushing.

In theory it is possible to get forced flushing but in practice it’s never been a problem just by being generous with disk space. It’s easy to be generous with your user’s disk space, but really, when you usually have disk space that is several dozen times larger than memory you’re just sacrificing a few percent of your disk for a commitlog.

Q: So the answer is basically that you hopefully never reach the situation that you actually run out of space for the commitlog to flush all the entries, and you hope that until you get to that point then you will flush all the previous contiguous memtables anyway. Right?

Avi: Yes, and that’s what happens in practice because the commitlog is larger than memory. Then some memtable reaches its limit and starts flushing. It is possible for what you describe to happen, but it is not so bad. It’s okay. You have a memtable that is flushed a little bit earlier than it otherwise would be. It means that there will be a little bit more compaction later. But in practice it’s never been a cause of a problem.
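
Editor’s note: the interaction between commitlog space and memtable flushes can be explored with a toy Python simulation. Everything here is an assumption made for illustration: the class, the byte sizes, flushing the largest memtable when the memtable limit is hit, and releasing a segment once no unflushed memtable has entries in it. It is not ScyllaDB’s implementation.

```python
class Shard:
    """Toy model: one shared commitlog and several memtables on one shard."""

    def __init__(self, commitlog_limit, memtable_limit, segment_size):
        self.commitlog_limit = commitlog_limit
        self.memtable_limit = memtable_limit  # limit on all memtables together
        self.segment_size = segment_size
        self.memtables = {}                   # table -> buffered bytes
        self.segments = []                    # {"used": int, "tables": set}
        self.flushes = []                     # (table, reason)

    def commitlog_bytes(self):
        return sum(seg["used"] for seg in self.segments)

    def _reclaim(self):
        """Drop non-active segments that no unflushed memtable still needs."""
        active = self.segments[-1]
        self.segments = [s for s in self.segments if s["tables"] or s is active]

    def _flush(self, table, reason):
        self.flushes.append((table, reason))
        self.memtables[table] = 0
        for seg in self.segments:
            seg["tables"].discard(table)
        self._reclaim()

    def write(self, table, size):
        if not self.segments or self.segments[-1]["used"] + size > self.segment_size:
            self.segments.append({"used": 0, "tables": set()})
            self._reclaim()
        active = self.segments[-1]
        active["used"] += size
        active["tables"].add(table)
        self.memtables[table] = self.memtables.get(table, 0) + size

        # Normal case: memtables hit their own limit first.
        if sum(self.memtables.values()) >= self.memtable_limit:
            largest = max(self.memtables, key=self.memtables.get)
            self._flush(largest, "memtable limit")

        # Forced case: the commitlog is full, so flush whatever pins
        # the oldest segment, even if that memtable is nearly empty.
        while self.commitlog_bytes() > self.commitlog_limit and len(self.segments) > 1:
            for pinned in sorted(self.segments[0]["tables"]):
                self._flush(pinned, "commitlog full")


if __name__ == "__main__":
    roomy = Shard(commitlog_limit=100, memtable_limit=50, segment_size=10)
    tight = Shard(commitlog_limit=30, memtable_limit=50, segment_size=10)
    for shard in (roomy, tight):
        shard.write("cold", 1)          # a single write to a rarely used table
        for _ in range(200):
            shard.write("hot", 1)       # many writes to a busy table
    print({reason for _, reason in roomy.flushes})
    print({reason for _, reason in tight.flushes})
```

In the `roomy` configuration the commitlog is as large as memory and the memtable limit is half of it, mirroring the ratio in the answer, and the rarely written table is never flushed early. In the `tight` configuration the commitlog fills first and the small memtable is flushed early.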

Q: What is ScyllaDB’s approach to debugging issues from the field? Do you rely on logs? On traces? How about issues that you cannot recreate on your machines, that come from clients?

Avi: First of all, it’s hard. There is no magic answer for that. We have a tracing facility that can be enabled dynamically. All of the interesting code paths are tagged with a kind of dynamic tracing system. You can select a particular request for tracing and then all of the code paths that this request touches log some information. That is stored in a ScyllaDB table. Then you can use that to understand what went wrong.
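
Editor’s note: the idea of per-request tracing can be sketched in a few lines of Python. The names, the trace messages, and the in-memory dictionary standing in for the trace table are all hypothetical; the sketch only shows code paths that record events for requests selected for tracing and stay silent for everything else.

```python
import uuid

# Stand-in for the table where trace events are stored (hypothetical).
trace_table = {}


class Request:
    def __init__(self, query, traced=False):
        self.query = query
        # Only requests selected for tracing get a trace session.
        self.session_id = str(uuid.uuid4()) if traced else None


def trace(request, message):
    """Record an event only if this request was selected for tracing."""
    if request.session_id is not None:
        trace_table.setdefault(request.session_id, []).append(message)


def execute(request):
    # Each interesting code path is tagged with a trace point.
    trace(request, "parsing " + repr(request.query))
    trace(request, "reading from memory")
    trace(request, "reading from disk")
    return "ok"


if __name__ == "__main__":
    execute(Request("SELECT 1"))
    traced = Request("SELECT 2", traced=True)
    execute(traced)
    print(trace_table[traced.session_id])
```

Because untraced requests only pay for a cheap check, tracing can stay compiled into production code and be switched on for a single request when needed.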

There is also a regular log with variable levels which is pretty standard. This has limited usefulness because you cannot just open the log for debug mode because if you have a production application that is generating a large number of requests it will quickly spam the log and it will also impact the running requests. So there’s no magic answer. Sometimes tracing works.

What we do go to is the metrics. The metrics don’t give you any direct information about a particular request but they do give you a very good overall picture about what the system is doing. The state of various queues. Where memory is allocated. The rate at which various subsystems are operating.

There’s a lot more than just the metrics that we expose in the dashboard. If you go to Prometheus then you have access to thousands of metrics (I think it’s now in the thousands) that describe different parts of the system. We use those to gain an understanding of what the customer system is doing. From that we can try to drill down, whether with tracing or maybe by asking the customer to do different things.

In extreme cases where we are not able to make progress we will create a debug build with special instrumentation. But of course we try to avoid doing that. That’s an expensive way to debug. We prefer having enough debug information in advance present in the tracing system and in the metrics.

Q: How do you debug Seastar applications of this size? I considered Seastar for my pet project but it looks virtually undebuggable.

Avi: It’s not undebuggable. It does have a learning curve. It takes time to learn how to debug it. Once you do, then you need some experience and expertise. We rely a lot on the sanitizers that are part of the compiler. If you run the application in debug mode, they find memory bugs with high precision and pinpoint the problem, so it’s easy to resolve. We are also adding debug tools. We have error injection tools in ScyllaDB and also in Seastar. We use those to try to flush out bugs before they hit users and customers.
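
Editor’s note: error injection can be illustrated with a minimal Python sketch. The `ErrorInjector` class, the injection point name, and the flush functions are hypothetical and are not the ScyllaDB or Seastar tools; the sketch shows a named injection point in a code path that a test can arm to force a failure.

```python
class ErrorInjector:
    """Registry of named injection points that tests can switch on."""

    def __init__(self):
        self._armed = {}

    def arm(self, point, error):
        self._armed[point] = error

    def check(self, point):
        """Called from regular code paths; raises only if the point is armed."""
        error = self._armed.pop(point, None)  # one-shot: disarm after firing
        if error is not None:
            raise error


injector = ErrorInjector()


def flush_rows(rows):
    injector.check("flush_before_write")      # hypothetical injection point
    return len(rows)


def flush_with_retry(rows, attempts=2):
    """Code under test: retry a failed flush a limited number of times."""
    last_error = None
    for _ in range(attempts):
        try:
            return flush_rows(rows)
        except OSError as error:
            last_error = error
    raise last_error


if __name__ == "__main__":
    injector.arm("flush_before_write", OSError("injected disk error"))
    # The first attempt fails with the injected error, the retry succeeds.
    print(flush_with_retry([1, 2, 3]))
```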

Next Steps

Our developers are very responsive. If you have more questions, here are a few ways to get in touch.

- First is on our Slack channel.

- You can also get your questions answered via our ScyllaDB users mailing list.

- Sign up for one of our Virtual Workshops for a hands-on online session with a ScyllaDB Solution Architect.

- Plus, to attend similar virtual events in the future, make sure you sign up for the ScyllaDB meetup on Meetup.com.