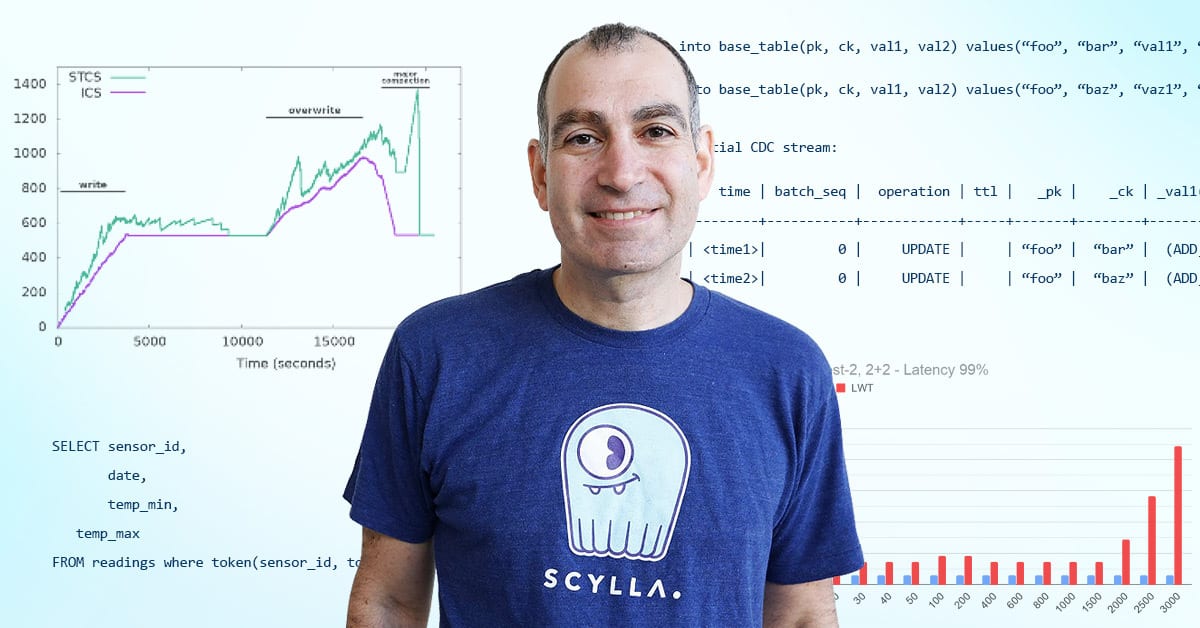

Understanding what goes on inside a fast NVMe SSD is key to maximizing its performance, especially with IO-heavy workloads. But how do you peer into what has traditionally been a black box?

That’s the problem ScyllaDB CTO and co-founder Avi Kivity recently tackled. Specifically, he wanted a new level of insight into how ScyllaDB, a NoSQL database with a unique close-to-the-hardware architecture, looks from the perspective of the NVMe-based Nitro SSDs featured in the new AWS EC2 I4i instances.

Avi set out to visualize this and shared his approach in a P99 CONF keynote.

With Avi as your guide, watch real-time visualizations of IO: a dynamic display of color-coded reads and writes, random IO, sequential IO, and the disk bandwidth consumed by each. After explaining how he created a window into the disk, he narrates and interprets a real-time visualization, including:

- How ScyllaDB’s shard-per-core architecture is reflected in disk behavior

- How sequential SSTable writes differ from commitlog writes

- How compaction ramps up and down in response to read and write workloads

- How the database works with the disk to avoid lock contention

Next, Avi explores a different perspective: how the same database operations manifest themselves in monitoring dashboards. Metric by metric, he sheds light on the database internals behind each graph’s interesting trends.

Experience this fascinating journey in system observability – and learn how you can gain this level of insight into your own IO.

A Full Lineup of Low-Latency Engineering Feats at P99 CONF

If you’re enticed by engineering feats like this, we invite you to binge-watch the low-latency engineering talks from P99 CONF. Here’s a quick taste:

Analyze Virtual Machine Overhead Compared to Bare Metal with Tracing

Steven Rostedt – Software Engineer, Google

Running a virtual machine will obviously add some overhead over running on bare metal. This is expected. But there are some cases where the overhead is much higher than expected. This talk discusses using tracing to analyze this overhead from a Linux host running KVM. Ideally, the guest would also be running Linux to get a more detailed explanation of the events, but analysis can still be done when the guest is something else.

Why User-Mode Threads Are Often the Right Answer

Ron Pressler – Project Loom Technical Lead, Java Platform Group, Oracle

Concurrency is the problem of scheduling simultaneous, largely independent tasks that compete for resources, in order to increase application throughput. Multiple approaches to scalable concurrency, which is so important for high-throughput servers, are used in various programming languages: OS threads, asynchronous programming styles (“reactive”), syntactic stackless coroutines (async/await), and user-mode threads. This talk will explore the problem, explain why Java has chosen user-mode threads to tackle it, and compare the various approaches and the tradeoffs they entail. We will also cover the main source of the performance of user-mode threads and asynchronous I/O, which is so often misunderstood (it’s not about context-switching).

Overcoming Variable Payloads to Optimize for Performance

Armin Ronacher – Creator of Flask; Architect, Sentry.io

When you have a significant number of events coming in from individual customers, but do not want to spend the majority of your time on latency issues, how do you optimize for performance? This becomes increasingly difficult when payload sizes differ by multiple orders of magnitude, the data is complex enough to affect processing, and the stream of data is impossible to predict. In this session, you’ll hear from Armin Ronacher, Principal Architect at Sentry and creator of the Flask web framework for Python, on how to build ingestion and processing pipelines that accommodate complex events, helping to ensure your teams reach a throughput of hundreds of thousands of events per second.

Keeping Latency Low for User-Defined Functions with WebAssembly

Piotr Sarna – Principal Software Engineer, ScyllaDB

WebAssembly (WASM) is a great choice for user-defined functions because it was designed to be easily embeddable, with a focus on security and speed. Still, executing functions provided by users should not cause latency spikes – it’s important for individual database clusters and absolutely crucial for multi-tenancy. To keep latency low, one can use a WebAssembly runtime with async support. One such runtime is Wasmtime, a Rust project perfectly capable of running WebAssembly functions cooperatively and asynchronously. This talk briefly describes WebAssembly and Wasmtime, and shows how to integrate them into a C++ project in a latency-friendly manner, while implementing the core runtime for user-defined functions in async Rust.