A new blog series on how to avoid common mistakes with ScyllaDB. First up: selecting a suitable infrastructure.

If you have use cases that require high throughput and predictable low latency, there is a good chance that you will eventually come across ScyllaDB (and – hopefully – that you landed here!), whether you come from another NoSQL database or are exploring alternatives to your existing relational model. No matter which solution you end up choosing, there is always a learning curve, and chances are that you will run into some roadblocks that could have been avoided.

Although it is very easy to get up to speed with ScyllaDB, there are important considerations and practices to follow before getting started. Fairly often, we see users coming from a different database using the very same practices they learned in that domain – only to find out later that what worked well with their previous database might inhibit their success with another.

ScyllaDB strives to provide the most seamless user experience when using our “monstrously fast and scalable” database. Under the hood, we employ numerous advancements to help you achieve unparalleled performance – for example, our state-of-the-art I/O scheduler, self-tuning scheduling groups, and our close-to-the-metal shared-nothing approach. And to benefit from all the unique technology that ScyllaDB has to offer, there are some considerations to keep in mind.

Despite all the benefits that ScyllaDB has to offer, it is fundamentally “just” a piece of software (more precisely, a distributed database). And, as with all complex software, it’s possible to take it down paths where it won’t deliver the expected results. That’s why we offer extensive documentation on how to get started. But we still see many users stumbling at critical points in the getting started process. So, we decided to create this blog series sharing very direct and practical advice for navigating key decisions and tradeoffs and explaining why certain moves are problematic.

This blog series is written by people in the field who have spent years helping thousands of users – just like you – every day, and who have seen the same mistakes made over and over again. Some of these mistakes might cause issues immediately, while others may come back to haunt you at some point in the future. It’s not a full-blown guide on ScyllaDB troubleshooting (that would be another full-fledged documentation project). Rather, it aims to make your landing painless so that you can get the most out of ScyllaDB and start off on a solid foundation that’s performant as well as resilient.

We’ll be releasing new blogs in this series (at least) monthly, then compile the complete set into a centralized guide. If you want to get up to speed FAST, you might also want to look at these tips and tricks.

What are you hoping to get out of ScyllaDB?

Mistake: Assuming that one size fits all

Before we dive into considerations, recommendations, and mistakes, it’s helpful to take a step back and try to answer a simple question: What are you hoping to get out of ScyllaDB? Let’s unravel the deep implications of such a simple question.

Fundamentally, a database is a very boring computer program. Its purpose is to accept input, persist your data, and (whenever necessary) return an output with the requested data you’re looking for. Although its end goal is simple, what makes a database really complex is how it will fit your specific requirements.

Some use cases prioritize extremely low read latencies, while others focus on ingesting very large datasets as fast as possible. The “typical” ScyllaDB use cases land somewhere between these two. To give you an idea of what’s feasible, here are a few extreme examples:

- A multinational cybersecurity company manages thousands of ScyllaDB clusters with many nodes, each with petabytes of data and very high throughput.

- A top travel site is constantly hitting millions of operations per second.

- A leading review site requires AGGRESSIVELY low (1–3 ms) p9999 latencies for their ad business.

However, ultra-low latencies and high throughput are not the only reason why individuals decide to try out ScyllaDB. Perhaps:

- Your company has decided to engage in a multi-cloud strategy, so you require a cloud-agnostic / vendor-neutral database that scales across your tenants.

- Your application simply needs to retain and serve terabytes or even petabytes of data.

- You are starting a new small greenfield project that does not yet operate at “large scale,” but you want to be proactive and ensure that it will always be highly available and that it will scale along with your business.

- Automation, orchestration, and integration with Kubernetes, Terraform, Ansible, (you name it) are the most important aspects for you right now.

These aren’t theoretical examples. They are all based on real-world use cases of organizations using ScyllaDB in production. We’re not going to name names, but we’ve recently worked closely with:

- An AdTech company using ScyllaDB Alternator (our DynamoDB-compatible API) to move their massive set of products and services from AWS to GCP.

- A blockchain development platform company using ScyllaDB to store and serve Terabytes of blockchain transactions on their on-premises facilities.

- A company that provides AI-driven maintenance for industrial equipment; they anticipated future growth and wanted to start with a small but scalable solution.

- A risk scoring information security company using ScyllaDB because of how it integrates with their existing automations (Terraform and Ansible build pipeline).

Just as there are many different reasons for considering ScyllaDB, there is no single formula or configuration that will fit everyone. The better you understand your use case needs, the easier your infrastructure selection path is going to be. That being said, we do have some important recommendations to guide you before you begin your journey with us.

Infrastructure Selection

Selecting the right infrastructure is complex, especially when you try to define it broadly. After all, ScyllaDB runs on a variety of different environments, CPU architectures, and even across different platforms.

Deciding where to run ScyllaDB

Mistake: Departing from the official recommendations without a compelling reason and awareness of the potential impacts

As a quick reference, we recommend running ScyllaDB on storage-optimized instances with local disks, such as (but not limited to) AWS i4i and i3en, GCP N2 and N2D with local SSDs, and Azure Lsv3 and Lasv3-series. These are well-balanced instance types with production-grade CPU:Memory:Disks ratios.

Note that ScyllaDB’s recommendations are not hard requirements. Many users have been able to meet their specific expectations while running ScyllaDB outside our official recommendations (e.g., on network-attached storage or hard disk drives, within their container orchestration solution of choice, with 1GB of memory, under slow and unreliable network links…). The possibilities are endless.

Mistake: Starting with too much, too soon

ScyllaDB is very flexible. It will run on almost any relatively recent hardware (in containers, on your laptop, on your Raspberry Pi) and it supports any topology you require. We encourage you to try ScyllaDB in a small under-provisioned environment before you take your workload into production. When the time comes and you are comfortable moving to the next stage, be sure to consider our deployment guidelines. This will not only improve your performance, but also save you frustration, wasted time, and wasted money.

Planning your cluster topology

Mistake: Putting all your eggs in a single basket

We recommend that you start with at least 3 nodes per region, and that your nodes have a homogeneous configuration. The reason for 3 nodes is fairly simple: It is the minimum number required in order to provide a quorum and to ensure that your workload will continuously run without any potential data loss in the event of a single node failure.

The recommendation of a homogeneous configuration requires a bit more of an explanation. ScyllaDB is a distributed database where all nodes are equal. There is no concept of primary or standby; ScyllaDB nodes are leaderless. An interesting aspect of a distributed system is the fact that it will only ever run as fast as its slowest replica. Having a heterogeneous cluster may easily introduce cluster-wide contention if you happen to overwhelm your “less capable” replicas.

Your node placement is equally important. After all, no one wants to be woken up in the middle of the night because their highly available database became totally inaccessible due to something simple (e.g., a power outage or a network link going down). When planning your topology, ensure that your nodes are placed in a way that preserves a quorum during a potential infrastructure outage. For example, for a 3 node cluster, you will want each node to be placed in a separate availability zone. For a 6 node cluster, you will want to keep the same 3 availability zones, and ensure that you have 2 nodes in each.
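
To make the quorum reasoning concrete, here is a minimal Python sketch. It is not ScyllaDB code: the function names and zone labels are hypothetical, and it assumes a replication factor of 3 with replicas spread as evenly as possible across availability zones.

```python
def quorum(replication_factor: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1


def place_nodes(node_count: int, zones: list[str]) -> dict[str, int]:
    """Spread nodes round-robin across zones and return nodes per zone."""
    placement = {zone: 0 for zone in zones}
    for i in range(node_count):
        placement[zones[i % len(zones)]] += 1
    return placement


def survives_zone_outage(zone_count: int, replication_factor: int = 3) -> bool:
    """True if a quorum of replicas remains after losing the fullest zone.

    Assumption: replicas are spread as evenly as possible across zones.
    """
    worst_zone_replicas = -(-replication_factor // zone_count)  # ceiling division
    return replication_factor - worst_zone_replicas >= quorum(replication_factor)


if __name__ == "__main__":
    print(quorum(3))                                  # 2
    print(place_nodes(6, ["az-a", "az-b", "az-c"]))   # 2 nodes in each zone
    print(survives_zone_outage(zone_count=3))         # True
    print(survives_zone_outage(zone_count=1))         # False
```

Under these assumptions, three zones keep 2 of 3 replicas available when one zone goes down, while a single zone loses everything at once.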

Planning for the inevitable failure

Mistake: Hoping for the best instead of planning for the worst

Infrastructure fails, and there is nothing that our loved database – or any database – can do to prevent external failures from happening. It is simply out of our control. However, you DO have control over how much the inevitable failure will impact your workload.

For example, a single node failure in a 3 node cluster means that you have temporarily lost around 33% of your processing power, which may or may not be acceptable for your use case. That same single failure in a 6 node cluster would mean roughly a 17% compute loss.
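
The arithmetic behind these percentages is simple enough to sketch in Python. The helper name is hypothetical, and it assumes identical nodes, in line with the homogeneous configuration recommended earlier.

```python
def capacity_lost_pct(total_nodes: int, failed_nodes: int = 1) -> float:
    """Percentage of cluster compute lost when `failed_nodes` go down.

    Assumption: all nodes are identical, so each contributes an equal share.
    """
    if total_nodes <= 0 or not 0 <= failed_nodes <= total_nodes:
        raise ValueError("failed_nodes must be between 0 and total_nodes")
    return 100.0 * failed_nodes / total_nodes


if __name__ == "__main__":
    for nodes in (3, 6, 12):
        print(f"{nodes} nodes: {capacity_lost_pct(nodes):.1f}% lost per node failure")
```

Running it for 3, 6, and 12 nodes shows how the impact of a single failure shrinks as the cluster grows.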

While having many smaller nodes spreads out compute and data distribution, it also increases the probability of hitting infrastructure failures. The key here is to ensure that you keep the right balance: You might not want to rely solely on a few nodes, nor on too many.

Keeping it balanced as you scale out

Mistake: Topology imbalances

When you feel it is time to scale out your topology, ensure that you do so in increments that are a multiple of 3. Otherwise, you will introduce a scenario known as imbalance into your cluster. An imbalance is essentially a situation where some nodes of your cluster take more requests and own more data than others and thus become heavily loaded. Remember the homogeneous recommendation? Yes, it also applies to the way you place your instances. We will explain more about how this situation may happen when we start talking about replication strategies in a later blog.
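
As a rough illustration of why the multiple-of-3 rule matters, the Python sketch below assumes 3 availability zones, each holding one full replica of the data (a rack-aware setup with a replication factor of 3). That assumption and the function names are ours for illustration; they are not ScyllaDB internals.

```python
def nodes_per_zone(node_count: int, zone_count: int = 3) -> list[int]:
    """Distribute nodes across zones as evenly as possible."""
    if node_count < zone_count:
        raise ValueError("need at least one node per zone")
    base, extra = divmod(node_count, zone_count)
    return [base + (1 if i < extra else 0) for i in range(zone_count)]


def per_node_share(node_count: int, zone_count: int = 3) -> list[float]:
    """Fraction of one full replica owned by each node, listed per zone.

    Assumption: every zone stores one complete replica, split evenly
    among the nodes in that zone.
    """
    return [1 / n for n in nodes_per_zone(node_count, zone_count)]


def is_balanced(node_count: int, zone_count: int = 3) -> bool:
    """True when every zone ends up with the same number of nodes."""
    return node_count % zone_count == 0


if __name__ == "__main__":
    for count in (6, 7, 9):
        print(count, nodes_per_zone(count), is_balanced(count))
```

With 7 nodes the layout becomes 3, 2, and 2 nodes per zone. Under the assumption above, each node in a two-node zone owns half of a replica, while each node in the three-node zone owns only a third.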

On-prem considerations

Mistake: Resource sharing

Although the vast majority of ScyllaDB deployments are on the cloud (and if that’s your case, take a look at ScyllaDB Cloud), we understand that many organizations may need to deploy it inside their own on-premises facilities. If that’s your case – and high throughput and low latencies are a key concern – be aware that you want to avoid noisy neighbors at all costs. Similarly, you want to avoid overcommitting compute resources, such as CPU and memory, with other guests. Instead, ensure that you dedicate these to your database from your hypervisor’s perspective.

Infrastructure specifics

All right! Now that we understand the basics, let’s discuss some specifics, including numbers, for core infrastructure components – starting with the CPU.

CPUs

Mistake: Assuming per-core throughput is static

As we explained in our benchmarking best practices and in our sizing article, we estimate a single physical core to deliver around 12,500 operations per second after replication, considering a payload of around 1KB. The actual number you will see depends on a variety of factors, such as the CPU make and model, the underlying storage bandwidth and IOPS, the application access patterns and concurrency, the expected latency goals, and the data distribution – among others.

Note that we said a single physical core. If you are deploying ScyllaDB in the cloud, then you probably know that vCPU (virtual CPU) terminology is used when describing instance types. ARM instances – such as the AWS Graviton2 – define a single vCPU as a physical core since there is no concept of Simultaneous Multithreading (SMT) for this processor family. Conversely, on Intel-based instances, a vCPU is a single hardware thread of a physical core. Therefore, a single physical core on SMT-enabled processors is equivalent to 2 vCPUs in most cloud environments.

Also note the phrase “after replication.” With a distributed database, you expect that your data is highly available and durable via replication. This means that every write operation that you issue against the database must also be replicated to its peer replicas. As a result, depending on your replication settings, the effective throughput per core that you will be able to achieve from the client-side perspective (before replication) will vary.
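
Putting the last few paragraphs together, a back-of-the-envelope estimate could look like the Python sketch below. The 12,500 operations per second figure comes from the text above. The function names are hypothetical, and so is the simplification that client-side write throughput is the cluster total divided by the replication factor (reads depend on your consistency level and are not modeled here).

```python
OPS_PER_PHYSICAL_CORE = 12_500  # estimate from the text: after replication, ~1KB payload


def physical_cores(vcpus: int, smt_enabled: bool) -> int:
    """Convert vCPUs to physical cores (2 vCPUs per core when SMT is enabled)."""
    return vcpus // 2 if smt_enabled else vcpus


def client_write_throughput(
    vcpus_per_node: int,
    nodes: int,
    replication_factor: int,
    smt_enabled: bool = True,
) -> float:
    """Rough client-side writes per second the cluster might sustain.

    Assumption: each client write costs `replication_factor` replica writes.
    """
    cores = physical_cores(vcpus_per_node, smt_enabled) * nodes
    cluster_ops_after_replication = cores * OPS_PER_PHYSICAL_CORE
    return cluster_ops_after_replication / replication_factor


if __name__ == "__main__":
    # 3 nodes with 16 SMT vCPUs each, replication factor 3
    print(client_write_throughput(vcpus_per_node=16, nodes=3, replication_factor=3))
```

Treat the result as a starting point for a benchmark, not a guarantee. As noted above, the real number depends on your hardware, access patterns, and latency goals.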

Memory

Mistake: Being too conservative with memory

The memory allocated for ScyllaDB nodes is also important. In general, there is no such thing as “too much memory.” Here, the primary considerations are how much data you are planning to store per node and what your latency expectations are.

ScyllaDB implements its own specialized internal cache. That means that our database does not require complex memory tuning, and that we do not rely on the Linux kernel caching implementation. This allows us to have visibility into how many rows and partitions are being served from the cache, and which reads have to go to disk before being served. It also allows us to have fine-grained control over cache evictions, when and how flushes to disk should happen, prioritize some requests over others, and so on. As a result, the more memory allocated to the database, the larger ScyllaDB’s cache will be. This results in fewer round trips to disk, and thus lower latencies.

We recommend at least 2GB of memory per logical core, or 16GB in total (whichever number is higher). There are some important aspects around these numbers that you should be aware of.

As part of ScyllaDB’s shared-nothing architecture, we statically partition the memory available to the process among its CPUs. As a result, a virtual machine with 4 vCPUs and 16GB of memory will have a bit less than 4GB of memory allocated per core. It is a bit less because ScyllaDB will always leave some memory for the Operating System to function. On top of that, ScyllaDB also shards its data per vCPU, which essentially means that – considering the same example – your node will have 4 shards, each one with roughly 4GB of memory.
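
The memory guidance above can be sketched in a few lines of Python. The helper names are hypothetical, and `os_reserved_gb` is only a placeholder: the text says ScyllaDB leaves some memory for the operating system but does not say how much.

```python
def recommended_min_memory_gb(logical_cores: int) -> int:
    """At least 2GB per logical core, or 16GB in total, whichever is higher."""
    return max(2 * logical_cores, 16)


def memory_per_shard_gb(
    total_memory_gb: float,
    vcpus: int,
    os_reserved_gb: float = 1.0,  # placeholder value, not an official figure
) -> float:
    """Approximate memory available to each shard (one shard per vCPU)."""
    return (total_memory_gb - os_reserved_gb) / vcpus


if __name__ == "__main__":
    print(recommended_min_memory_gb(4))    # 16
    print(recommended_min_memory_gb(16))   # 32
    print(memory_per_shard_gb(16, 4))      # 3.75 with the 1GB placeholder
```

With the 1GB placeholder, the 4 vCPU / 16GB example yields 3.75GB per shard, which matches the “a bit less than 4GB” described above.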

Mistake: Ignoring the importance of swap

We recommend that you DO configure swap for your nodes. This is a very common oversight and we even have a FAQ entry for it. By default, ScyllaDB calls mlock() when it starts, a system call that locks a specified memory range and prevents it from being swapped out. Having swap space configured helps you keep the system from running out of memory.

Disk/RAM ratios

Mistake: Ignoring or miscalculating the disk/RAM ratio

Considering an evenly spread data distribution, you can safely store a ratio of up to 100:1 Disk/RAM. Using the previous example again, with 16GB of memory, you can store around 1.6TB of data in that node. That means that every vCPU can handle about 400GB of data.

It is important for you to understand the differences here. Even though you can store ~1.6TB node-wise, it does not mean that a single vCPU alone will be able to handle the same amount of data. Therefore, if your use case is susceptible to imbalances, you may want to take that into consideration when analyzing your vCPU, Memory, and Data Set sizes together.

But what happens if we get past that ratio? And why does that ratio even exist? Let’s start with the latter. The ratio exists because ScyllaDB needs to cache metadata in order to ensure that disk lookups are done efficiently. If the core’s memory ends up with no room to store these components, or if these components take up the majority of the RAM allocated for the core, then we arrive at the answer to the former question: your node may not be able to allocate all the memory it needs, and strange (bad) things may start to happen.

In case you are wondering: Yes, we are currently investigating approaches to ease up on that limit. However, it is important that you understand the reasoning behind it.

One last consideration concerning memory: You do not necessarily want to live on the bleeding edge. In fact, we recommend that you do not. The actual Disk/RAM ratio depends heavily on the nature of your use case. That is, if you require constant low p99 latencies and your use case frequently reads from the cache (hot data), then smaller ratios will benefit you the most. The higher your ratio gets, the less cache space you will have. We consider a 30:1 Disk/RAM ratio to be a good starting point.
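
The Disk/RAM arithmetic from this section can be expressed as a small Python sketch. The function names are hypothetical, and the calculation assumes evenly distributed data, as stated above.

```python
MAX_RATIO = 100      # upper bound from the text (100:1 Disk/RAM)
STARTING_RATIO = 30  # suggested starting point from the text (30:1)


def data_per_node_gb(memory_gb: float, ratio: int = MAX_RATIO) -> float:
    """Data a node can hold for a given Disk/RAM ratio."""
    return memory_gb * ratio


def data_per_vcpu_gb(memory_gb: float, vcpus: int, ratio: int = MAX_RATIO) -> float:
    """Data each vCPU (shard) can handle, assuming an even distribution."""
    return data_per_node_gb(memory_gb, ratio) / vcpus


if __name__ == "__main__":
    # The running example: 4 vCPUs and 16GB of memory
    print(data_per_node_gb(16))                      # 1600 GB, i.e. ~1.6TB
    print(data_per_vcpu_gb(16, 4))                   # 400.0 GB per vCPU
    print(data_per_node_gb(16, STARTING_RATIO))      # 480 GB
    print(data_per_vcpu_gb(16, 4, STARTING_RATIO))   # 120.0 GB per vCPU
```

Comparing the two ratios side by side shows how much headroom the 30:1 starting point leaves for cache relative to the 100:1 ceiling.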

Networking

Mistake: Overlooking the importance of networking

The larger your deployment gets, the more network-intensive it will be. As a rule of thumb, we recommend starting with a network speed of 10 Gbps or more. This is commonly available within most deployments nowadays.

Having enough networking bandwidth not only impacts application latency. It is also important for communication within the database. As a distributed database, ScyllaDB nodes require constant communication to propagate and exchange information, and to replicate data among its replicas. Moreover, maintenance activities such as replacing nodes, topology changes, and performing repairs and backups can become very network-intensive as these require a good amount of data transmission.

Here’s a real-life example. A MarTech company reported elevated latencies during their peak hours, even though their cluster resources had no apparent contention. Upon investigation, we realized that the ScyllaDB cluster was behind a 2 Gbps link, and that the amount of network traffic generated during their peak hours was enough for some nodes to max out their allocated bandwidth. As a result, the entire cluster’s speed hit a wall.

Avoid hitting a wall when it comes to networking, and be sure to also extend that recommendation to your application layer. If – and when – network contention is introduced anywhere in your infrastructure, you’ll pay the price in the form of latency due to queueing.
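
For a very rough feel of how quickly a link can saturate, consider the Python sketch below. It only multiplies request rate by payload size. The function names and the `overhead_factor` parameter are hypothetical, and the sketch deliberately ignores replication traffic, protocol overhead, and maintenance operations, all of which add to the real number.

```python
def required_gbps(
    ops_per_sec: float,
    payload_bytes: int,
    overhead_factor: float = 1.0,  # hypothetical knob for traffic not modeled here
) -> float:
    """Approximate bandwidth in Gbps needed to move the payloads alone."""
    bits_per_sec = ops_per_sec * payload_bytes * 8 * overhead_factor
    return bits_per_sec / 1e9


def link_utilization(
    ops_per_sec: float,
    payload_bytes: int,
    link_gbps: float,
    overhead_factor: float = 1.0,
) -> float:
    """Fraction of the link consumed; values at or above 1.0 mean saturation."""
    return required_gbps(ops_per_sec, payload_bytes, overhead_factor) / link_gbps


if __name__ == "__main__":
    # 250,000 operations per second with 1KB payloads
    print(f"{required_gbps(250_000, 1024):.3f} Gbps needed")
    print(f"{link_utilization(250_000, 1024, link_gbps=2):.0%} of a 2 Gbps link")
    print(f"{link_utilization(250_000, 1024, link_gbps=10):.0%} of a 10 Gbps link")
```

Even this optimistic estimate exceeds a 2 Gbps link at that request rate, while a 10 Gbps link still has room to spare.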

What else?

We are not close to being done yet; there is still a long way to go!

You have probably noticed that we skipped one rather important infrastructure component: storage. This is likely the infrastructure component that most people will have trouble with. Storage plays a crucial role with regard to performance. In the next blog (Top Mistakes with ScyllaDB: Storage), we discuss disk types, best practices, common misconceptions, filesystem types, RAID setups, ScyllaDB tunable parameters, and what makes disks so special in the context of databases.

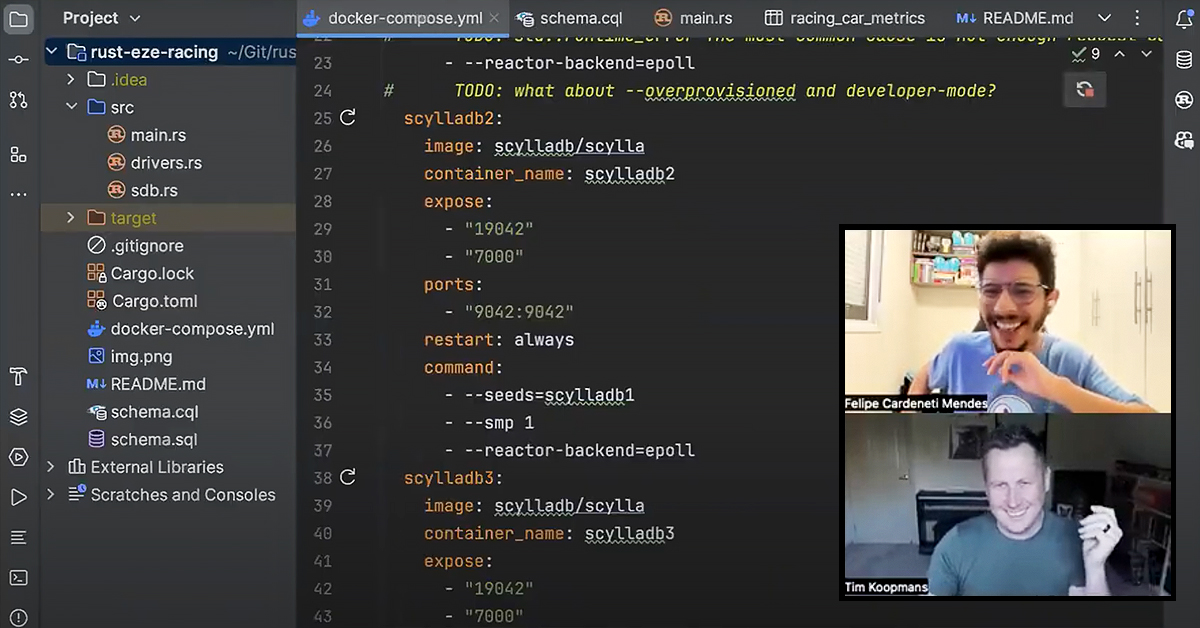

Pro Tip: Felipe shares top data modeling mistakes in our NoSQL Data Modeling Masterclass, which is now available on demand.