All about disk types, best practices, common misconceptions, filesystem types, RAID setups, tunable parameters, and what makes disks so special in the context of databases.

Note: This is the second installment in our blog series designed to help users get the most out of ScyllaDB.

Read part 1: Intro + Infrastructure

Storage is perhaps the most controversial infrastructure component spanning deployments. Often we see users struggling with performance problems which eventually boil down to a mistake introduced by suboptimal disks. As persistent databases heavily rely on both disk IOPS and bandwidth for performance, failure to set realistic expectations on the storage you choose, combined with the lack of an understanding of how your database interacts with it, will dictate whether your deployment becomes successful – or a catastrophic failure.

When it comes down to disks, there’s a broad range of options to choose from: from cloud persistent drives to ultra-low latency NVMe SSDs. Each one has its own weaknesses and strengths that might help or hurt your workload.

We’re not here to compare and contrast every distinct storage medium ever created. The best storage medium for you ultimately depends on what level of performance your workload requires. Instead, let’s understand how ScyllaDB asynchronously orchestrates several competing tasks and how these tasks eventually get to dispatch I/O to your underlying disks. After, let’s cover some important storage-related performance tuning parameters, and drill down to important considerations you need to make in order to avoid mistakes when selecting this important (although often neglected) component: the disk.

Why you should care

As we discussed in the first part of this series, although the fundamental goal of a database is simple, its internal complexity lies in the fact that it needs to handle several actors competing for resources. Consider a scenario in which we have lost a node and we need to replace it. It is the responsibility of the database to provide data integrity according to your SLA terms, while internal background operations (such as compactions and memtable flushes) continue to have enough I/O capacity and, most importantly, that the user-facing workload continues to perform with zero perceivable impact.

When operating at scale, it is crucial to account for internal database operations and know how they may affect your workload. This fundamental understanding will not only help you select a storage backend; it will also help you promptly diagnose potential performance-related bottlenecks that may arise. Working with a suboptimal backend will likely introduce a growing backlog queue: a ticking time bomb waiting to undermine your latencies.

ScyllaDB employs several isolation mechanisms to prioritize your workload in the face of the many external actors outside your control. However, at the same time, the database can not entirely neglect the existence of background tasks; otherwise, it could place the overall system’s stability at risk. It is therefore important that you understand how ScyllaDB isolates and prioritizes access to system resources (CPU & disk), as well as understand the middle man among all involved actors competing for disk I/O: the I/O scheduler.

Performance isolation in ScyllaDB

You might have heard that ScyllaDB is capable of automatically self-tuning to the workload in question. Let’s understand how we accomplish this.

ScyllaDB is written on top of Seastar, an asynchronous C++ framework for high-performance applications. Simply put, we make use of Seastar’s isolation features known as Scheduling Groups for CPU and I/O scheduling. The latter is used by our I/O Scheduler to prioritize access to disk resources. This allows the database to know when the system has spare resources. With this knowledge, it knows when to speed up background tasks, as well as when to momentarily slow down its background tasks in order to prioritize the incoming user traffic.

Scheduling Groups have the following goals. First, to isolate the performance of components from each other. For example, we do not want compactions to starve the system; otherwise, the database may introduce throughput and latency variability down to your workload. Second, it allows the database to control each class separately. Back to our example, it may be that compactions fall behind for too long, creating a backlog of SSTables to compact. In that case, we have internal controllers which will increase the priority of the compaction class over time until its backlog gets back under control.

One mistake that users often make comes down to the assumption that either their resources are infinite, or that the database will stall background operations in favor of the application’s workload indefinitely. ScyllaDB will scale as much as your hardware can, and past that point you introduce system contention. As a result of that contention, the database will start making sacrifices and choose what to prioritize. If you continue to push the system to its limits, some background operations will pile up, and – since our controllers are designed to dynamically adjust each task priority to clear the backlog – your latencies will start suffering.

There are several Scheduling Groups that are beyond the scope of this write-up. However, in case you are wondering, yes – ScyllaDB’s Workload Prioritization feature is based on this same concept. It allows you to define distinct scheduling groups internally within the database, which it should follow in order to prioritize incoming requests.

I/O scheduling

Now that we understand how ScyllaDB achieves performance isolation, it makes sense that ScyllaDB implements its own I/O scheduler. While we want to keep the in-disk concurrency at a high-level to ensure we are fully utilizing its capacity, naively dispatching all incoming I/O requests down to the disks has a high probability of inflicting higher tail-latencies on your workload. Enter the I/O scheduler.

The role of ScyllaDB’s I/O scheduler is to avoid overwhelming the disk and, instead, queue (and prioritize) requests in the user space. Although the concept itself is fairly straightforward, its implementation is inherently complex, and we are still optimizing this process on a daily basis.

To understand how we have advanced over time, let’s quickly summarize the I/O scheduler generations:

- The first generation of our I/O scheduler simply allowed the user to specify the maximum useful disk request count before requests started to pile up on the storage layer. However, it did not take into consideration different request sizes, plus it did not differentiate between reads and writes.

- The second generation addressed the previous generation’s shortcomings and performed much better under read/write workloads with varying request sizes. However, it still did not account for mixed workloads and implemented static per-shard partitioning. The latter would equally split the disk capacity across all CPU shards. Therefore, specific shards in nodes with high core count or with imbalances could starve when the system wasn’t necessarily busy I/O wise.

- We finally get to the (current) third generation, which introduced cross-shard capacity sharing and token-bucket dispatching. This addressed the previous generation’s limitations and included more sophisticated self-throttling mechanics to prevent the disk from being overwhelmed with the amount of IO to handle and static bandwidth limiting for I/O classes. We’re continuing to improve and roll out additional features on top of this generation.

We have learned a lot after 6 years of I/O scheduling and, unsurprisingly, it became a notable Engineering effort to deliver a state-of-the-art user space modern solution. In addition to ensuring that your workload’s latencies are always in-check, it also provides nice insights into how ScyllaDB’s operations work from the disk perspective.

Getting the most out of the ScyllaDB I/O Scheduler

Scheduling Groups tuning

A variety of tuning parameters exist in ScyllaDB (scylla --help) as well as in Seastar (scylla --help-seastar) to modify the scheduler behavior. Let’s cover some important ones which you should be aware of. Be sure to check all available options thoroughly if you continue to face performance-related issues.

task-quota-ms

Defines the maximum time Seastar reactors will wait between polls for new tasks. Defaults to 0.5ms. Reducing this value will cause Seastar to poll more often, at the expense of more CPU cycles. If you have CPU capacity to spare and you believe your disks are not a bottleneck, lowering this value will allow Seastar to dispatch tasks at a faster pace.

num-io-groups

Splits the disk capacity in smaller CPU groups. By default, all CPUs in a single NUMA node join an IO group and share the capacity inside this group. For 2 or more NUMA nodes, the disk capacity is statically split into the number of NUMA nodes.

io-latency-goal-ms

Defines how long requests to disk should take. Defaults to 1.5 * task-quota-ms. The value should be greater than a single request’s latency, as it allows for more requests to be dispatched simultaneously. For spinning disks with an average latency of 10ms, increasing the latency goal to at least 50ms should allow for some concurrency.

max-task-backlog

Sets the maximum allowed number of tasks in the backlog, after which I/O is ignored. Defaults to 1000. Although a continuously increasing backlog is a bad sign, depending on your workload’s nature (e.g., heavy ingestions for a few hours, then idle), it may make sense to increase the defaults.

idle-poll-time-us

During each poll round, define for how long the reactor will poll for tasks before going back to sleep. Defaults to 200 microseconds. Increasing this value causes the reactor to poll for longer at the expense of CPU utilization, whereas reducing it has the opposite effect.

poll-mode

Effectively disables the reactor from going to sleep at the expense of 100% CPU utilization all the time. Can be used to save some microseconds in polling latency.

Evaluating your disks

ScyllaDB ships iotune, a disk benchmarking tool (from Seastar) used to analyze your disk’s I/O profile and store its results in configuration files. The resulting files are then fed to the I/O scheduler during database initialization.

We also ship a wrapper around iotune named scylla_io_setup, tailored especially for ScyllaDB installations. The following output demonstrates a disk evaluation on a consumer-grade laptop:

$ sudo scylla_io_setup

Starting Evaluation. This may take a while...

Measuring sequential write bandwidth: 1761 MB/s (deviation 67%)

Measuring sequential read bandwidth: 5287 MB/s (deviation 7%)

Measuring random write IOPS: 9084 IOPS (deviation 64%)

Measuring random read IOPS: 56649 IOPS

Writing result to /etc/scylla.d/io_properties.yaml

Writing result to /etc/scylla.d/io.confscylla_io_setup output, note how both read IOPS and bandwidth exceed the writes by a good margin

As shown in the output, the evaluation results are going to be recorded in two files:

- /etc/scylla.d/io_properties.yaml – containing iotune’s measured values and;

- /etc/scylla.d/io.conf – Seastar I/O scheduling parameters. By default, points to the generated YAML file

In most ScyllaDB installations that’s all you need to do in order to tune your I/O scheduler. However, most people will simply “set and forget” these settings without accounting for aspects such as disks worn out over time, and ScyllaDB’s improvements to its I/O tuning logic. Given these aspects, it is recommended to re-execute the evaluation on a timely basis.

Trimming your disks

The trim command notifies the disks about which blocks of data it can safely discard. This helps the disk garbage-collection process, and avoids slowing down future writes to the involved blocks.

Frequently trimming your disks is generally a good idea. It can result in a great performance boost – especially for databases, where files are frequently being created, removed, and moved around.

ScyllaDB also comes with a trim utility named scylla_fstrim which is a wrapper to the fstrim(8) Linux command. By default, it is scheduled to run on a weekly basis. To enable it, simply run the scylla_fstrim_setup command and you should be all set.

Filesystem selection

Back in 2016, we explained the reasons why we have qualified XFS as the standard filesystem for ScyllaDB. Even though many years have passed and the support for AIO in other file systems has improved since, the decision to primarily support only XFS simplifies our testing, allowing us to shift focus to innovate other parts of the database.

In case you are wondering…yes, we ship with yet another wrapper to help you assemble your RAID array, as well as properly format it to XFS. The scylla_raid_setup command will take a comma-separated list of block devices and assemble them in a RAID-0 array (should you be using more than 1 disk). After, it will properly format the array as XFS and mount the filesystem for use.

As a bonus, by default the script will also configure a systemd.mount(5) unit to automatically mount your filesystem should you ever need to reboot your instances.

Storage selection

ScyllaDB’s I/O scheduler is a user space component responsible for fully maximizing your disk’s utilization while keeping your latencies as low as possible. The settings and parameters discussed in the previous section play a key role in ensuring that the scheduler performs accordingly to your disk’s measured capacity, and not more than that.

The disks you choose to use with your database directly impact both the throughput and latency expectations you should have for your workload. Therefore, let’s discuss some deployment options, starting with what we generally recommend, and then shifting to some best practices involved when you decide NOT to follow these recommendations.

If you read through our System Requirements page, you’ll see that we highly recommend using locally attached SSDs for your ScyllaDB deployment. When there are two or more disks, then these should be configured in a RAID-0 setup for higher performance.

The reasons for such a recommendation are simple: NVMe SSDs deliver lower latencies with less variability and can achieve higher bandwidth and IOPS when compared to other medium types, both of which are critical aspects for data-intensive applications. Given the increasing demand seeking for faster solutions, it also became an economically viable option, as prices have been constantly dropping ever since the NVMe specification was introduced over a decade ago.

If the thought of using locally attached SSDs gives you goosebumps, don’t worry! You are not alone and we are going to (hopefully) address all of your fears right here, right now.

Locally-attached disk mythbusting

Generally speaking, the resistance to running a database on top of locally attached disks typically comes down to the reliability aspect. Users initially worry because – especially under Cloud environments – when you lose a virtual machine with locally attached disks (due to a power loss, human error, etc.), you will typically lose all the data along with it. Similarly, the failure of a single disk in a RAID-0 setup is enough to corrupt the entire array.

ScyllaDB is a highly available database where data replication is built-in to the core of the product. When a properly configured cluster loses a node, the system will transparently rebalance traffic, without requiring operator intervention. This means that a disk loss alone won’t jeopardize a production workload. In fact, if you have been following all of our recommendations thoroughly, your deployment should be able to fully survive even a full zonal outage.

In addition to the reliability aspect, fairly often a common budgetary misconception also kicks in: Since NVMes are faster, then they must be more expensive than regular disks, right? Well… Wrong! As it turns out, locally attached NVMes are actually less expensive than network-attached SSDs! For example, under the GCP South Carolina (us-east1) region, the Network Attached SSD persistent disk costs $0.17 per GB, whereas a Local SSD costs $0.08 per GB. The calculation is a bit different under AWS since the NVMe SSD prices are included along with the instance cost. However, comparing instance prices along with EBS GP3 disk prices will likewise exhibit the same behavior.

Different storage types: software and hardware RAIDs

Despite the fact that NVMe SSDs deliver extremely low access latencies, you may have your own reasons not to stick with them. As far as our recommendations go, we want to ensure your workloads are able to sustain high throughputs under extremely low latencies for long periods. However, this may not be your need. In that case, you are free to experiment with alternatives.

Generally speaking, stick with a RAID-0 setup instead of other RAID types. We already spoke about resiliency, and learned that losing an entire array is not necessarily a problem. It is the database’s responsibility to replicate your data to other nodes, and using another RAID type (such as RAID-5) may introduce additional write overhead due to parity, further constraining your performance and providing very little benefits.

It is also common for some companies to make use of Hardware RAID controllers, with dedicated processors and write caches to cope with the performance overhead. Here, we start to dive into the unknown. We are not here to disparage specific vendors, but our experience working some RAID controllers revealed that – in some circumstances – the I/O performance would be better when the disks were directly attached to the guest operating system and being managed via mdadm(8) rather than directly via the RAID controller itself.

The reasons why the performance of some controllers was suboptimal in some of our experiments go beyond what we aim to achieve in this write-up, but in some cases it was related to the way the RAID controller supported asynchronous Direct IO calls, the access method used by ScyllaDB.

Improving write performance

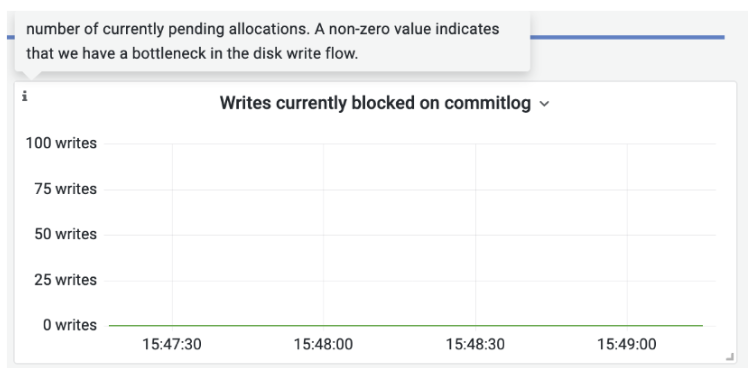

Whether you are admittedly using a slow disk or you think you are running on a fast disk which is actually slow, you’ll eventually realize your latencies could have been better. The ScyllaDB Monitoring stack makes it easy to spot related problems when your disks are not able to cope with your write throughput.

Writes currently blocked on commitlog panel, over the Detailed dashboard

In general, you’ll always want to ensure that this panel metrics is always zero. If that’s not the case, you could mount a different disk (preferably with improved write IOPS and bandwidth) in ScyllaDB’s commitlog directory (defaults to /var/lib/scylla/commitlog).

What’s next?

Selecting the right infrastructure for your ScyllaDB deployments is a crucial aspect towards success. Along with the first part of this series, we hope to have shed light on the considerations you need to take into account during your deployment.

Now that we’re past the infrastructure selection part, let’s shift our focus to actually deploying ScyllaDB! Stay tuned!