How data is replicated to support low latency for ZeroFlucs’ global usage patterns – without racking up unnecessary costs

ZeroFlucs’ business – processing sports betting data – is rather latency sensitive. Content must be processed in near real-time, constantly, and in a region local to both the customer and the data. And there are incredibly high throughput and concurrency requirements – events can update dozens of times per minute and each one of those updates triggers tens of thousands of new simulations (they process ~250,000 in-game events per second).

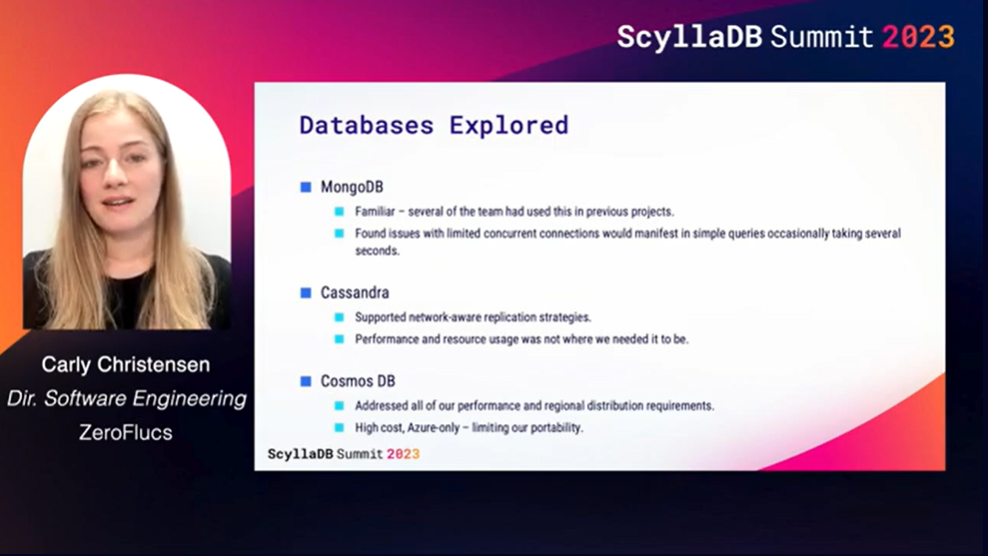

At ScyllaDB Summit 23, ZeroFlucs’ Director of Software Engineering Carly Christensen walked attendees through how ZeroFlucs uses ScyllaDB to provide optimized data storage local to the customer – including how their recently open-sourced package (cleverly named Charybdis) facilitates this. This blog post, based on that talk, shares their brilliant approach to figuring out exactly how data should be replicated to support low latency for their global usage patterns without racking up unnecessary storage costs.

What’s ZeroFlucs?

First, a little background on the business challenges that the ZeroFlucs technology is supporting. ZeroFlucs’ same-game pricing lets sports enthusiasts bet on multiple correlated outcomes within a single game. This is leagues beyond traditional bets on what team will win a game and by what spread. Here, customers are encouraged to design and test sophisticated game theories involving interconnected outcomes within the game. As a result, placing a bet is complex, and there’s a lot more at stake as the live event unfolds.

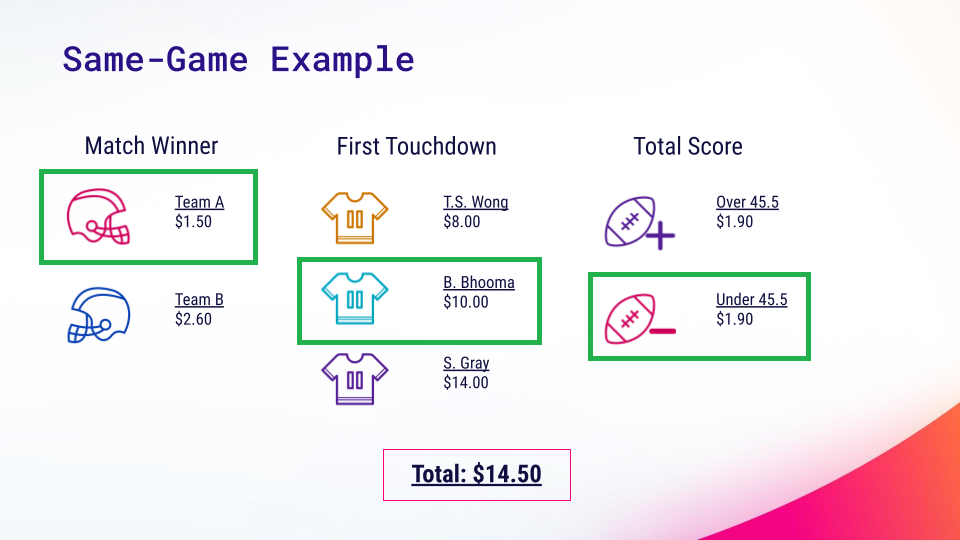

For example, assume there are three “markets” for bets:

- Will Team A or Team B win?

- Which player will score the first touchdown?

- Will the combined scores of Team A and B be over or under 45.5 points?

Someone could place a bet on Team A to win, B. Bhooma to score the first touchdown, and for the total score to be under 45.5 points. If you look at those three outcomes and multiply the prices together, you get a price of around $28. But in this case, the correct price is approximately $14.50.

Carly explains why. “It’s because these are correlated outcomes. So, we need to use a simulation-based approach to more effectively model the relationships between those outcomes. If a team wins, it’s much more likely that they will score the first touchdown or any other touchdown in that match. So, we run simulations, and each simulation models a game end-to-end, play-by-play. We run tens of thousands of these simulations to ensure that we cover as much of the probability space as possible.”
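To make the effect of correlation concrete, here is a minimal sketch of simulation-based pricing. It is a toy model, not ZeroFlucs' simulation engine: the probabilities, the `simulate_game` and `price_same_game_bet` names, and the "price = 1 / probability" convention are all assumptions chosen for illustration.

```python
import random


def simulate_game(rng):
    """Simulate one toy game and return three outcomes as booleans.

    The probabilities are made up. The key point is that the second and
    third outcomes become more likely when Team A wins (positive correlation).
    """
    team_a_wins = rng.random() < 0.50
    star_scores_first_td = rng.random() < (0.40 if team_a_wins else 0.10)
    total_under = rng.random() < (0.60 if team_a_wins else 0.40)
    return team_a_wins, star_scores_first_td, total_under


def price_same_game_bet(n_sims=50_000, seed=7):
    """Return (naive_price, simulated_price) for the three-leg bet."""
    rng = random.Random(seed)
    results = [simulate_game(rng) for _ in range(n_sims)]

    # Marginal probability of each leg, estimated independently.
    p_win = sum(r[0] for r in results) / n_sims
    p_td = sum(r[1] for r in results) / n_sims
    p_under = sum(r[2] for r in results) / n_sims

    # Joint probability: fraction of simulated games where all legs hit.
    p_joint = sum(all(r) for r in results) / n_sims

    naive_price = 1 / (p_win * p_td * p_under)  # treats legs as independent
    simulated_price = 1 / p_joint               # respects the correlation
    return naive_price, simulated_price


naive, simulated = price_same_game_bet()
print(f"naive price: {naive:.2f}, simulated price: {simulated:.2f}")
```

Because the legs are positively correlated in this toy model, the joint outcome is more likely than the product of the individual probabilities suggests, so the simulated price comes out lower than the naive one. That matches the direction of the $28 versus $14.50 example above.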

The ZeroFlucs Architecture

The ZeroFlucs platform was designed from the ground up to be cloud native. Their software stack runs on Kubernetes, using Oracle Container Engine for Kubernetes. There are 130+ microservices, growing every week. And a lot of their environment can be managed through custom resource definitions (CRDs) and operators. As Carly explains, “For example, if we want to add a new sport, we just define a new instance of that resource type and deploy that YAML file out to all of our clusters.” A few more tech details:

- Services are primarily Golang

- Python is used for modeling and simulation services

- gRPC is used for internal communications

- Kafka is used for “at least once” delivery of all incoming and outgoing updates

- GraphQL is used for external-facing APIs

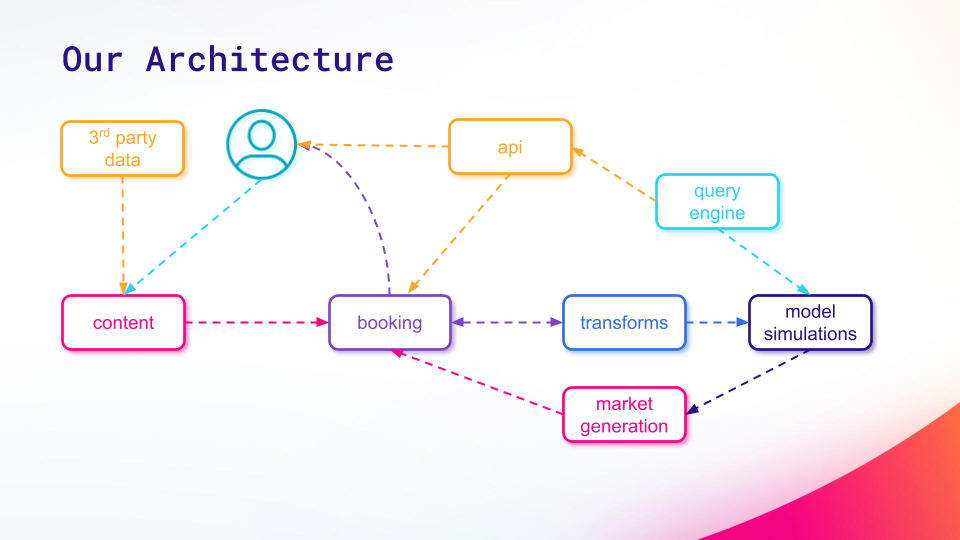

As the diagram above shows:

- Multiple third-party sources send content feeds.

- Those content items are combined into booking events, which are then used for model simulations.

- The simulation results are used to generate hundreds to thousands of new markets (specific outcomes that can be bet on), which are then stored back on the original booking event.

- Customers can interact directly with that booking event. Or, they can use the ZeroFlucs API to request prices for custom combinations of outcomes via the ZeroFlucs query engine. Those queries are answered with stored results from their simulations.

Any content update starts the entire process over again.

Keeping Pace with Live In-Play Events

ZeroFlucs’ ultimate goal is to process and simulate events fast enough to offer same-game prices for live in-play events. For example, they need to predict whether this play results in a touchdown and which player will score the next touchdown – and they must do it fast enough to provide the prices before the play is completed. There are two main challenges to accomplishing this:

- High throughput and concurrency. Events can update dozens of times per minute, and each update triggers tens of thousands of new simulations (hundreds of megabytes of data). They’re currently processing about 250,000 in-game events per second.

- Customers can be located anywhere in the world. That means ZeroFlucs must be able to place their services, and the associated data, near these customers. With each request passing through many microservices, even a small increase in latency between those services and the database can result in a major impact on the total end-to-end processing time.
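A rough back-of-the-envelope sketch shows why per-hop database latency matters so much. The hop count and latency figures below are illustrative assumptions, not ZeroFlucs measurements, and the model assumes each service on the path makes one sequential database round trip.

```python
def end_to_end_ms(hops, service_ms, db_round_trip_ms):
    """Total time for a request that passes through `hops` services in
    sequence, each doing its own work plus one database round trip."""
    return hops * (service_ms + db_round_trip_ms)


# Hypothetical numbers: 10 services on the request path, 2 ms of work each.
local = end_to_end_ms(hops=10, service_ms=2.0, db_round_trip_ms=1.0)
remote = end_to_end_ms(hops=10, service_ms=2.0, db_round_trip_ms=80.0)
print(f"local database: {local:.0f} ms, cross-region database: {remote:.0f} ms")
```

Under these assumptions, moving the database out of region multiplies the total time many times over, even though each individual round trip only grew by tens of milliseconds.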

Selecting a Database That’s Up to the Task

Carly and team initially explored whether three popular databases might meet their needs here.

- MongoDB was familiar to many team members. However, they discovered that with a high number of concurrent queries, some queries took several seconds to complete.

- Cassandra supported network-aware replication strategies, but its performance and resource usage fell short of their requirements.

- CosmosDB addressed all their performance and regional distribution needs, but they couldn’t justify its high cost or the vendor lock-in that comes with Azure-only availability.

Then they thought about ScyllaDB, a database they had discovered while working on a different project. It didn’t make sense for the earlier use case, but it met this project’s requirements quite nicely. As Carly put it: “ScyllaDB supported the distributed architecture that we needed, so we could locate our data replicas near our services and our customers to ensure that they always had low latency. It also supported the high throughput and concurrency that we required. We haven’t yet found a situation that we couldn’t just scale through. ScyllaDB was also easy to adopt. Using ScyllaDB Operator, we didn’t need a lot of domain knowledge to get started.”

Inside their ScyllaDB Architecture

ZeroFlucs is currently using ScyllaDB hosted on Oracle Cloud Flex 4 VMs. These VMs allow them to change the CPU and memory allocation to those nodes if needed. It’s currently performing well, but the company’s throughput increases with every new customer. That’s why they appreciate being able to scale up and run on bare metal if needed in the future.

They’re already using ScyllaDB Operator to manage ScyllaDB, and they were reviewing their strategy around ScyllaDB Manager and ScyllaDB Monitoring at the time of the talk.

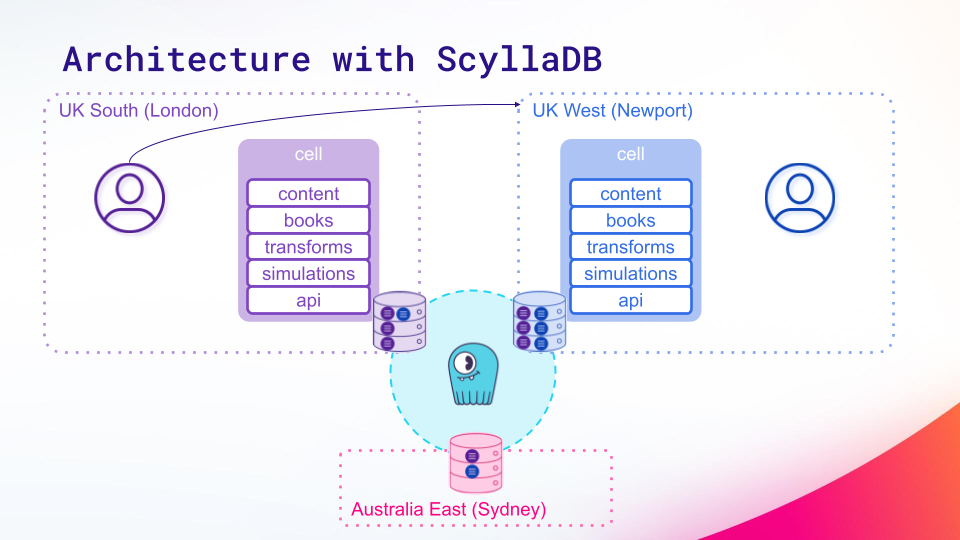

Ensuring Data is Local to Customers

To make the most of ScyllaDB, ZeroFlucs divided their data into three main categories:

- Global data. This is slow-changing data used by all their customers. It’s replicated to each and every one of their regions.

- Regional data. This is data that’s used by multiple customers in a single region (for example, a sports feed). If a customer in another region requires their data, they separately replicate it into that region.

- Customer data. This is data that is specific to that customer, such as their booked events or their simulation results. Each customer has a home region where multiple replicas of their data are stored. ZeroFlucs also keeps additional copies of their data in other regions that they can use for disaster recovery purposes.
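One way to picture the three categories is as a function from category to per-region replica counts. The sketch below is an assumption-laden illustration: the function name, the replica counts (3 at home, 1 for disaster recovery), and the "sydney" region are hypothetical and not taken from the talk.

```python
def replication_map(category, all_regions, home_region=None, dr_regions=(),
                    home_rf=3, dr_rf=1):
    """Return {region: replica_count} for one keyspace.

    The categories mirror the list above; the replica counts are assumptions.
    """
    if category == "global":
        # Global data is replicated to every region.
        return {region: home_rf for region in all_regions}
    if category not in ("regional", "customer"):
        raise ValueError(f"unknown category: {category!r}")
    if home_region not in all_regions:
        raise ValueError(f"unknown home region: {home_region!r}")

    replicas = {home_region: home_rf}
    if category == "customer":
        # Extra copies in other regions for disaster recovery.
        for region in dr_regions:
            if region != home_region:
                replicas[region] = dr_rf
    return replicas


regions = ["london", "newport", "sydney"]
print(replication_map("global", regions))
print(replication_map("regional", regions, home_region="london"))
print(replication_map("customer", regions, home_region="london",
                      dr_regions=["newport"]))
```

Keeping this mapping in one place means storage cost scales with where the data is actually needed, rather than with the total number of regions.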

Carly shared an example: “Just to illustrate that idea, let’s say we have a customer in London. We will place a copy of our services (‘a cell’) into that region. And all of that customer’s interactions will be contained in that region, ensuring that they always have low latency. We’ll place multiple replicas of their data in that region. And we’ll also place additional replicas of their data in other regions. This becomes important later.”

Now assume there’s a customer in the Newport region. They would place a cell of their services there, and all of that customer’s interactions would be contained within the Newport region so they also have low latency.

Carly continues, “If the London data center becomes unavailable, we can redirect that customer’s requests to the Newport region. And although they would have increased latency on the first hop of those requests, the rest of the processing is still contained within one data center – so it would still be low latency.” With a complete outage for that customer averted, ZeroFlucs would then increase the number of replicas of their data in the Newport region to restore data resiliency for them.
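The failover flow described here can be sketched in a few lines. This is a hypothetical illustration, not ZeroFlucs' routing logic: `pick_region`, `restore_resiliency`, and the target replica count of 3 are assumed names and values.

```python
def pick_region(home_region, replicas, healthy_regions):
    """Choose the region that should serve a customer's requests.

    replicas maps region name to the number of replicas held there.
    """
    if home_region in healthy_regions:
        return home_region
    candidates = [region for region, count in replicas.items()
                  if count > 0 and region in healthy_regions]
    if not candidates:
        raise RuntimeError("no healthy region holds this customer's data")
    # Prefer the region with the most replicas; ties break alphabetically.
    return max(sorted(candidates), key=lambda region: replicas[region])


def restore_resiliency(replicas, failover_region, target_rf=3):
    """Return a new replica map with the failover region raised to target_rf."""
    updated = dict(replicas)
    updated[failover_region] = max(updated.get(failover_region, 0), target_rf)
    return updated


replicas = {"london": 3, "newport": 1}
serving = pick_region("london", replicas, healthy_regions={"newport"})
print(serving, restore_resiliency(replicas, serving))
```

The second step matters because a single disaster-recovery replica keeps the customer online but leaves no redundancy until more replicas are added in the failover region.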

Between Scylla(DB) and Charybdis

ZeroFlucs separates data into services and keyspaces, with each service using at least one keyspace. Global data has just one keyspace, regional data has a keyspace per region, and customer data has a keyspace per customer. Some services can have more than one data type, and thus might have both a global keyspace as well as customer keyspaces.
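As a rough illustration of the keyspace-per-scope layout, the sketch below derives a keyspace name from a service name and a scope. The naming scheme and the `keyspace_name` function are hypothetical; the talk does not describe how ZeroFlucs actually names keyspaces. The 48-character check reflects the keyspace name limit in Cassandra-compatible databases.

```python
import re


def keyspace_name(service, scope, scope_id=None):
    """Build a keyspace name such as 'pricing_customer_acme_bets'.

    scope is 'global', 'region', or 'customer'. The scheme is an assumption.
    """
    if scope == "global":
        parts = [service, "global"]
    elif scope in ("region", "customer"):
        if not scope_id:
            raise ValueError(f"scope {scope!r} requires a scope_id")
        parts = [service, scope, scope_id]
    else:
        raise ValueError(f"unknown scope: {scope!r}")

    # Unquoted CQL identifiers use lowercase letters, digits, and underscores.
    name = re.sub(r"[^a-z0-9_]", "_", "_".join(parts).lower())
    if not name[0].isalpha():
        raise ValueError("keyspace name must start with a letter")
    if len(name) > 48:
        raise ValueError("keyspace name exceeds 48 characters")
    return name


print(keyspace_name("pricing", "global"))
print(keyspace_name("feeds", "region", "london"))
print(keyspace_name("pricing", "customer", "Acme-Bets"))
```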

They needed a simple way to manage the orchestration and updating of keyspaces across all their services. Enter Charybdis, the Golang ScyllaDB helper library that the ZeroFlucs team created and open sourced. Charybdis features a table manager that will automatically create keyspaces as well as add tables, columns, and indexes. It offers simplified functions for CRUD-style operations, and it supports LWT and TTL.
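The idea behind a table manager that adds columns automatically can be sketched as a diff between the desired schema and what already exists. This is not Charybdis code or its API (Charybdis is a Go library); `missing_column_statements` is a hypothetical helper, and the keyspace, table, and column names are made up.

```python
def missing_column_statements(keyspace, table, desired, existing):
    """Return ALTER TABLE statements for columns that are not yet present.

    desired maps column name to CQL type; existing is the set of column
    names already in the table.
    """
    return [
        f"ALTER TABLE {keyspace}.{table} ADD {column} {cql_type};"
        for column, cql_type in desired.items()
        if column not in existing
    ]


statements = missing_column_statements(
    "pricing_global", "markets",
    desired={"id": "uuid", "name": "text", "price": "double"},
    existing={"id", "name"},
)
print(statements)
```

Running a diff like this at startup lets a service roll out additive schema changes without a separate migration step, which is the kind of convenience the paragraph above describes.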

Note: For an in-depth look at the design decisions behind Charybdis, see this blog by ZeroFlucs Founder and CEO Steve Gray.

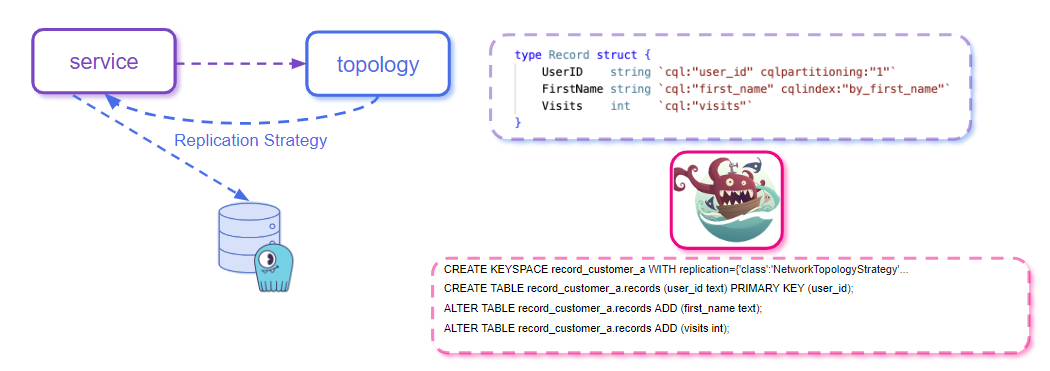

There’s also a Topology Controller service that’s responsible for managing the replication settings and keyspace information related to every service.

Upon startup, each service calls the topology controller and retrieves its replication settings. It then combines that data with its table definitions and uses it to maintain its keyspaces in ScyllaDB. The above image shows sample Charybdis-generated DDL statements that include a network topology strategy.
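For readers who cannot see the image, the sketch below shows roughly what a keyspace DDL statement with a network topology strategy looks like. It is not Charybdis output or its API: `create_keyspace_ddl` is a hypothetical helper, and the keyspace and datacenter names are assumptions. In a real cluster, the datacenter names must match the names the cluster reports.

```python
def create_keyspace_ddl(keyspace, replicas):
    """Render a CREATE KEYSPACE statement using NetworkTopologyStrategy.

    replicas maps datacenter name to replica count, for example the
    settings a service might receive from a topology controller.
    """
    if not replicas:
        raise ValueError("at least one datacenter is required")
    dc_options = ", ".join(
        f"'{dc}': {count}" for dc, count in sorted(replicas.items())
    )
    return (
        f"CREATE KEYSPACE IF NOT EXISTS {keyspace} "
        f"WITH replication = {{'class': 'NetworkTopologyStrategy', {dc_options}}};"
    )


print(create_keyspace_ddl("pricing_customer_acme", {"london": 3, "newport": 1}))
```

Because the statement uses `IF NOT EXISTS`, it is safe to issue on every startup. Changing replica counts on an existing keyspace would need an `ALTER KEYSPACE` statement instead.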

Next on their Odyssey

Carly concluded: “We still have a lot to learn, and we’re really early in our journey. For example, our initial attempt at dynamic keyspace creation caused some timeouts between our services, especially if it was the first request for that instance of the service. And there are still many ScyllaDB settings that we have yet to explore. I’m sure that we’ll be able to increase our performance and get even more out of ScyllaDB in the future.”

Watch the Complete Tech Talk

You can watch Carly’s complete tech talk and skim through her deck in our tech talk library.