Dr. Daniel Abadi (University of Maryland) and Kostja Osipov (ScyllaDB) discuss PACELC, CAP theorem, Raft, and Paxos

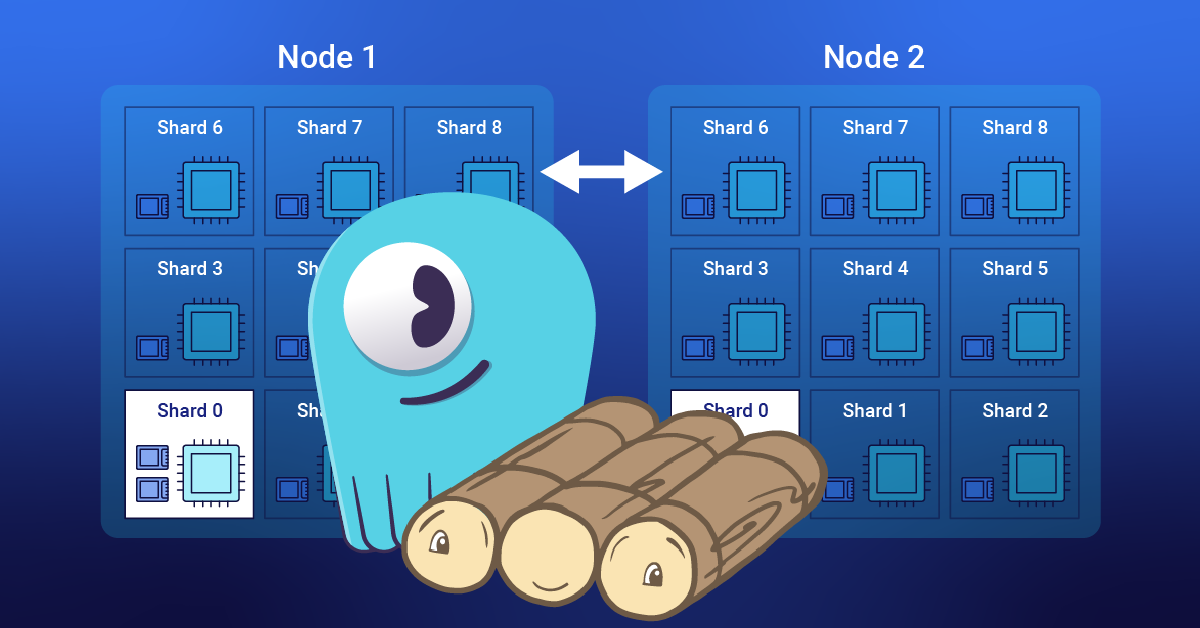

Database consistency has been a strongly consistent theme at ScyllaDB Summit over the past few years – and we guarantee that will continue at ScyllaDB Summit 2024 (free + virtual). Co-founder Dor Laor’s opening keynote on “ScyllaDB Leaps Forward” includes an overview of the latest milestones on ScyllaDB’s path to immediate consistency. Kostja Osipov (Director of Engineering) then shares the details behind how we’re implementing this shift with Raft and what the new consistent metadata management updates mean for users. Then on Day 2, Avi Kivity (Co-founder) picks up this thread in his keynote introducing ScyllaDB’s revolutionary new tablet architecture – which is built on the foundation of Raft.

Update: ScyllaDB Summit 2024 is now a wrap!

Access ScyllaDB Summit On Demand

ScyllaDB Summit 2023 featured two talks on database consistency. Kostja Osipov shared a preview of Raft After ScyllaDB 5.2: Safe Topology Changes (also covered in this blog series). And Dr. Daniel Abadi, creator of the PACELC theorem, explored The Consistency vs Throughput Tradeoff in Distributed Databases.

After their talks, Daniel and Kostja got together to chat about distributed database consistency. You can watch the full discussion below.

Here are some key moments from the chat…

What is the CAP theorem and what is PACELC?

Daniel: Let’s start with the CAP theorem. That’s the more well-known one, and that’s the one that came first historically. Some say it’s a three-way tradeoff, some say it’s a two-way tradeoff. It was originally described as a three-way tradeoff: out of consistency, availability, and tolerance to network partitions, you can have two of them, but not all three. That’s the way it’s defined. The intuition is that if you have a copy of your data in America and a copy of your data in Europe and you want to do a write in one of those two locations, you have two choices.

You do it in America, and then you say it’s done before it gets to Europe. Or, you wait for it to get to Europe, and then you wait for it to occur there before you say that it’s done. In the first case, if you commit and finish a transaction before it gets to Europe, then you’re giving up consistency because the value in Europe is not the most current value (the current value is the write that happened in America). But if America goes down, you could at least respond with stale data from Europe to maintain availability.

PACELC is really an extension of the CAP theorem. The PAC of PACELC is CAP. Basically, that’s saying that when there is a network partition, you must choose either availability or consistency. But the key point of PACELC is that network partitions are extremely rare. There are all kinds of redundant ways to get a message from point A to point B.

So the CAP theorem is kind of interesting in theory, but in practice, there’s no real reason why you have to give up C or A. You can have both in most cases because there’s never a network partition. Yet we see many systems that do give up on consistency. Why? The main reason why you give up on consistency these days is latency. Consistency just takes time. Consistency requires coordination. You have to have two different locations communicate with each other to be able to remain consistent with one another. If you want consistency, that’s great. But you have to pay in latency. And if you don’t want to pay that latency cost, you’re going to pay in consistency. So the high-level explanation of the PACELC theorem is that when there is a partition, you have to choose between availability and consistency. But in the common case where there is no partition, you have to choose between latency and consistency.

[Read more in Dr. Abadi’s paper, Consistency Tradeoffs in Modern Distributed Database System Design]
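The tradeoff Daniel describes can be sketched as a toy model with two replicas. This is only an illustration under stated assumptions: the `Replica` class, the `write` function, its `wait_for_remote` flag, and the latency figures (1 ms locally, 100 ms for a transatlantic round trip) are all hypothetical and are not taken from the discussion or from any real database.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    value: int = 0


def write(local, remote, value, *, wait_for_remote, partitioned,
          local_ms=1.0, round_trip_ms=100.0):
    """Apply a write at `local`; return (acknowledged, latency_ms).

    The latency figures are illustrative assumptions, not measurements.
    """
    if wait_for_remote:
        if partitioned:
            # Partition, choosing consistency: refuse the write
            # rather than let the two copies diverge.
            return False, local_ms
        # No partition, choosing consistency: both copies agree,
        # but the caller pays for the round trip.
        local.value = value
        remote.value = value
        return True, local_ms + round_trip_ms
    # Choosing availability (under a partition) or latency (otherwise):
    # acknowledge at once; the remote copy stays stale until it catches up.
    local.value = value
    return True, local_ms


america, europe = Replica("america"), Replica("europe")
print(write(america, europe, 1, wait_for_remote=True, partitioned=False))
print(write(america, europe, 2, wait_for_remote=False, partitioned=False))
print(europe.value)  # Europe still holds the older value
```

In this model the partition only matters when the writer insists on waiting for the remote copy. The latency cost of that choice is paid on every write, partition or not, which is the point the "ELC" half of PACELC makes.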

In ScyllaDB, when we talk about consensus protocols, there’s Paxos and Raft. What’s the purpose for each?

Kostja: First, I would like to second what Dr. Abadi said. This is a tradeoff between latency and consistency. Consistency requires latency, basically. My take on the CAP theorem is that it was really oversold back in the 2010s. We were looking at this as a fundamental requirement, and we have been building systems as if we are never going to go back to strong consistency again. And now the train has turned around completely. Now many vendors are adding back strong consistency features.

For ScyllaDB, I’d say the biggest difference between Paxos and Raft is whether it’s a centralized algorithm or a decentralized algorithm. I think decentralized algorithms are just generally much harder to reason about. We use Raft for configuration changes, which we use as a basis for our topology changes (when we need the cluster to agree on a single state). The main reason we chose Raft was that it has been very well specified, very well tested and implemented, and so on. Paxos itself is not a multi-round protocol. You have to build on top of it; there are papers on how to build multi-Paxos on top of Paxos and how you manage configurations on top of that. If you are a practitioner, you need some very complete thing to build upon. Even when we were looking at Raft, we found quite a few open issues with the spec. That’s why both can co-exist. And I guess we also have eventual consistency – so we could take the best of all worlds.

For data, we are certainly going to run multiple Raft groups. But this means that every partition is going to be its own consensus – running independently, essentially having its own leader. In the end, we’re going to have, logically, many leaders in the cluster. However, if you look at our schema and topology, there’s still a single leader. So for schema and topology, we have all of the members of the cluster in the same group. We do run a single leader, but this is an advantage because the topology state machine is itself quite complicated. Running in a decentralized fashion without a single leader would complicate it quite a bit more. For a layman, linearizable just means that you can very easily reason about what’s going on: one thing happens after another. And when you build algorithms, that’s a huge value. We build complex transitions of topology when you stream data from one node to another – you might need to abort this, you might need to coordinate it with another streaming operation, and having one central place to coordinate this is just much, much easier to reason about.
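To illustrate why a single ordered log makes topology transitions easier to reason about, here is a minimal single-process sketch. It is not Raft and not ScyllaDB's implementation: the `TopologyLog` class, the operation names, and the conflict rule are hypothetical stand-ins for whatever a leader-driven, agreed log would provide.

```python
class TopologyLog:
    """One ordered log of topology operations, applied one at a time."""

    def __init__(self):
        self.entries = []       # the single agreed order of operations
        self.streaming = set()  # nodes currently involved in streaming

    def propose(self, op, src, dst):
        """Apply an operation in log order; return True if it was accepted."""
        if op == "start_stream":
            if src in self.streaming or dst in self.streaming:
                # Conflicts with a streaming operation already in progress.
                return False
            self.streaming.update((src, dst))
        elif op in ("finish_stream", "abort_stream"):
            self.streaming.difference_update((src, dst))
        else:
            raise ValueError(f"unknown operation: {op}")
        self.entries.append((op, src, dst))
        return True


log = TopologyLog()
print(log.propose("start_stream", "n1", "n2"))  # accepted
print(log.propose("start_stream", "n2", "n3"))  # rejected: n2 is busy
print(log.propose("abort_stream", "n1", "n2"))  # accepted, frees n1 and n2
print(log.propose("start_stream", "n2", "n3"))  # accepted now
```

Because every decision is made against the same sequence of earlier entries, the conflict check is an ordinary sequential `if`. Without a single order, two coordinators could each accept a stream involving the same node.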

Daniel: Returning to what Kostja was saying. It’s not just that the trend (away from consistency) has started to reverse. I think it’s very true that people overreacted to CAP. It’s sort of like they used CAP as an excuse for why they didn’t create a consistent system. I think there are probably more systems than there should have been that might have been designed very differently if they didn’t drink the CAP Kool-Aid so much. I think it’s a shame, and as Kostja said, it’s starting to reverse now.

Daniel and Kostja on Industry Shifts

Daniel: We are now seeing a lot of systems that give you the best of both worlds. You don’t want to do consistency at the application level. You really want to have a database that can take care of the consistency for you. It can often do it faster than the application can deal with it. Also, you see bugs coming up all the time in the application layer. It’s hard to get all those corner cases right. It’s not impossible but it’s just so hard. In many cases, it’s just worth paying the cost to get the consistency guaranteed in the system and be working with a rock-solid system. On the other hand, sometimes you need performance. Sometimes users can’t tolerate 20 milliseconds – it’s just too long. Sometimes you don’t need consistency. It makes sense to have both options. ScyllaDB is one example of this, and there are also other systems providing options for users. I think it’s a good thing.

Kostja: I want to say more about the complexity problem. There was this research study on Ruby on Rails, Python, and Go applications, looking at how they actually use strongly consistent databases and different consistency levels that are in the SQL standard. It discovered that most of the applications have potential issues simply because they use the default settings for transactional databases, such as snapshot isolation rather than serializable isolation. Application complexity has to be taken into account. Building applications is more difficult and even more diverse than building databases. So you have to push the problem down to the database layer and provide strong consistency in the database layer to make all the data layers simpler. It makes a lot of sense.

Daniel: Yes, that was Peter Bailis’ 2015 UC Berkeley Ph.D. thesis, Coordination Avoidance in Distributed Databases. Very nice comparison. What I was saying was that they know what they’re getting, at least, and they just tried to design around it and they hit bugs. But what you’re saying is even worse: they don’t even know what they’re getting into. They’re just using the defaults and not getting full isolation and not getting full consistency – and they don’t even know what happened.
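For readers unfamiliar with how snapshot isolation can differ from serializable isolation, the textbook "write skew" anomaly can be sketched as follows. This is a simplified simulation, not an example from the study mentioned above: the on-call scenario, the function names, and the dictionary standing in for a table are all assumptions made for illustration.

```python
def run_under_snapshot_isolation(on_call):
    """Two concurrent transactions; each reads its own snapshot."""
    snapshot_alice = dict(on_call)  # taken when Alice's transaction starts
    snapshot_bob = dict(on_call)    # taken when Bob's transaction starts
    writes = {}
    # Each transaction checks "at least two people on call" against its
    # snapshot, then updates only its own row.
    if sum(snapshot_alice.values()) >= 2:
        writes["alice"] = 0
    if sum(snapshot_bob.values()) >= 2:
        writes["bob"] = 0
    # The two write sets do not overlap, so a write-write conflict check
    # finds nothing to reject and both transactions commit.
    on_call.update(writes)
    return on_call


def run_serially(on_call):
    """The same two transactions, executed one after the other."""
    if sum(on_call.values()) >= 2:
        on_call["alice"] = 0
    if sum(on_call.values()) >= 2:
        on_call["bob"] = 0
    return on_call


print(run_under_snapshot_isolation({"alice": 1, "bob": 1}))
print(run_serially({"alice": 1, "bob": 1}))
```

In the first run nobody is left on call, an outcome that no one-at-a-time ordering of the two transactions can produce. In the serial run the second transaction sees the first one's update and leaves Bob on call. An application relying on a default isolation level can hit the first outcome without any error being reported.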

Continuing the Database Consistency Conversation

Intrigued by database consistency? Here are some places to learn more:

- Join us at ScyllaDB Summit, especially for Kostja’s tech talk on Day 1

- Look at Daniel’s articles and talks

- Look at Kostja’s articles and talks